At Datumo, our Human Resources (HR) team sifts through dozens, sometimes hundreds, of resumes every day, arriving in all kinds of unstructured formats: PDFs, DOCX files, even images. Manually reviewing and extracting information from these documents is a slow, tedious, and often error-prone task. We needed a smarter, faster way to turn that unstructured resume data into clean, structured, and searchable information, fueling reliable recruiting analytics and freeing up valuable time for more strategic work.

To solve this, we designed and implemented a resume parsing API and processing pipeline powered by Natural Language Processing (NLP) techniques and Large Language Models (LLMs). Combined with BigQuery, this system delivers scalable, automated extraction of structured data from unstructured resumes, laying the groundwork for smarter, faster talent operations.

1. Why Automate Resume Parsing & Processing?

Reducing Bias in Resume Screening

Resumes come in many shapes and forms: PDFs, creative designs, scanned documents, or even plain text. This wide variety makes unstructured resume data hard to process, especially when the structure or design affects the visibility of key information.

Humans tend to favor visually polished or professionally designed resumes, often subconsciously associating visual appeal with competence. This introduces bias into the resume screening process. In contrast, our resume parsing system, powered by Large Language Models (LLMs) and structured prompts, extracts key information from resumes and consolidates it into a standardized, tabular format - regardless of layout or design.

By automating this parsing step, we reduce the influence of subjective formatting preferences and support more consistent, equitable human review based on skills, experience, and qualifications.

Speed & scale

In today’s recruiting environment, time and scale are major factors: companies can receive hundreds, sometimes thousands, of resumes for a single open role, which turns finding the right candidate into searching for a needle in a haystack. Manual processing at this scale is no longer feasible; recruiters can spend hours or days just reading through resumes, usually with limited consistency or insight into how decisions are made.

To handle bulk processing of applications efficiently, a more automated solution is necessary. Our resume parsing API processes incoming resumes continuously - extracting structured, relevant data from all resumes and enabling near real-time filtering, searching, and shortlisting.

By combining automated resume parsing with cloud-based data pipelines, we can process thousands of resumes per day without adding headcount, while ensuring that each resume is parsed consistently and presented in a standardized format for review. This is a major improvement over traditional manual screening.

Consistency & accuracy

For any HR decision-making process to be effective, the accuracy and consistency of extracted resume data is absolutely crucial. Whether it's a job title, employment dates, or listed skills, even small inconsistencies can mislead recruiters and lead to incorrect candidate ranking, filtering, or false negatives.

We designed our system to extract data accurately from unstructured documents, regardless of layout, formatting, or structure. This includes validation rules for:

- Date ranges - detecting gaps, overlaps, or invalid formats

- Role normalization - mapping diverse job titles into a consistent taxonomy

- Skill detection - identifying both explicit and implicit skills from different resume sections
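To make role normalization concrete, here is a minimal dictionary-based sketch. The taxonomy, alias lists, and seniority tokens are hypothetical placeholders, not our production mapping (which could also lean on the LLM itself). The idea is that lookups happen only after lowercasing, stripping punctuation, and separating out seniority words, so "Sr. Software Developer" and "software engineer" land in the same bucket.

```python
from __future__ import annotations

import re

# Hypothetical taxonomy: canonical role -> known aliases (lowercase, no punctuation).
TAXONOMY = {
    "Data Engineer": ("data engineer", "big data engineer", "etl developer"),
    "Data Scientist": ("data scientist", "machine learning scientist"),
    "Software Engineer": ("software engineer", "software developer", "programmer"),
}
# Seniority words are separated out so they don't block the alias lookup.
SENIORITY = {"senior", "sr", "junior", "jr", "lead", "principal", "staff"}

# Inverted index: alias -> canonical role, for constant-time lookup.
ALIAS_TO_ROLE = {alias: role for role, aliases in TAXONOMY.items() for alias in aliases}


def normalize_title(raw_title: str) -> tuple[str | None, str | None]:
    """Return (canonical_role, seniority_token); canonical_role is None if unmapped."""
    # Lowercase and turn punctuation such as '.', '-', '/' into spaces.
    words = re.sub(r"[^a-z0-9]+", " ", raw_title.lower()).split()
    seniority = next((w for w in words if w in SENIORITY), None)
    core = " ".join(w for w in words if w not in SENIORITY)
    return ALIAS_TO_ROLE.get(core), seniority
```

Unmapped titles come back as `None`, which makes them easy to queue for review or to add as new aliases over time.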

With high-quality, structured resume parsing output, recruiters can trust that they’re making decisions based on complete and consistent data - not formatting quirks or copy-paste errors. This creates a level playing field, increases recruitment efficiency, and ensures the foundations are in place to introduce more advanced screening tools in the future.

Better downstream use

Automating resume parsing isn't just about speed - it's about creating long-term value by turning unstructured information into structured, machine-readable data. Once resume content is parsed and normalized, it becomes significantly easier to build useful tools and workflows around it.

With structured resume parsing, we unlock a wide range of downstream applications, including:

- Candidate dashboards - allowing recruiters to explore and filter large applicant pools quickly

- Resume filtering & shortlisting - based on years of experience, skills, education, or location

- Job description match scoring - comparing extracted resume content against job requirements

- Talent mapping - grouping or tagging candidates for future opportunities

- Resume summarization - making it easier for humans or machines to evaluate candidates at a glance

While we are predominantly focused on data extraction and normalization, our structure easily allows for the integration of future innovations - from AI-driven resume evaluations and job matching engines to automated candidate insights. It’s a forward-looking investment that transforms how talent is sourced and managed.

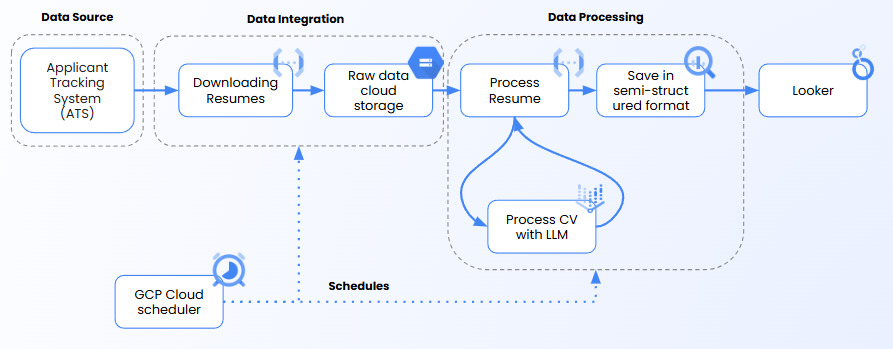

2. System Architecture: From Applicant Tracking System (ATS) to BigQuery

Our architecture connects directly to our Applicant Tracking System (ATS), where candidates submit resumes in various formats - including PDFs, DOCX files, and even images. The wide variety of resumes presents challenges in resume parsing, requiring a combination of traditional extraction and advanced Optical Character Recognition (OCR) to handle all cases reliably.

Step 1: Resume Download

A scheduled job (via GCP Cloud Scheduler) triggers a Google Cloud Function that downloads resumes from the ATS. The files are stored in raw form in Cloud Storage, where we differentiate between formats:

- PDFs

- DOCX

- Images

To avoid unnecessary processing and duplication, we also store resume metadata, including file hashes and timestamps. This allows us to detect and skip duplicate files efficiently, preserving compute resources and ensuring the accuracy of downstream data pipelines.
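A minimal sketch of the hash-based duplicate check, assuming SHA-256 content hashes. The class name and the in-memory dict are hypothetical stand-ins for whatever metadata store actually holds the hashes and timestamps (a database table or object metadata, for example).

```python
import hashlib
from datetime import datetime, timezone


class ResumeDedupIndex:
    """In-memory stand-in for the metadata store that records file hashes."""

    def __init__(self) -> None:
        # content hash -> metadata about the first file seen with that content
        self._seen: dict[str, dict] = {}

    @staticmethod
    def content_hash(data: bytes) -> str:
        # Hash the bytes, not the filename: a re-upload under a new name still matches.
        return hashlib.sha256(data).hexdigest()

    def register(self, file_id: str, data: bytes) -> bool:
        """Record the file; return True if it is new, False if it is a duplicate."""
        digest = self.content_hash(data)
        if digest in self._seen:
            return False
        self._seen[digest] = {
            "file_id": file_id,
            "first_seen": datetime.now(timezone.utc).isoformat(),
        }
        return True
```

Only files for which `register` returns `True` would be passed on to the processing step.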

Step 2: Resume Processing & LLM Parsing

Once files are stored in cloud storage, a resume processing function is triggered. This step handles:

Data extraction

After detecting the file type using its MIME type, we apply format-specific logic to extract the resume text content. This is a critical stage of the resume parsing pipeline, as it converts resumes from diverse sources into machine-readable formats suitable for further processing.
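MIME types reported by an upload form or implied by a file extension can be wrong, so one defensive option is to sniff the file's leading "magic bytes" and fall back to the filename only when that fails. The sketch below assumes that approach; the function names and routing labels are hypothetical. Because a DOCX file is a ZIP archive, it is confirmed by looking for the `word/document.xml` entry inside it.

```python
import io
import mimetypes
import zipfile

DOCX_MIME = "application/vnd.openxmlformats-officedocument.wordprocessingml.document"

# Leading byte signatures ("magic numbers") for the formats the pipeline accepts.
SIGNATURES = [
    (b"%PDF-", "application/pdf"),
    (b"\x89PNG\r\n\x1a\n", "image/png"),
    (b"\xff\xd8\xff", "image/jpeg"),
    (b"GIF87a", "image/gif"),
    (b"GIF89a", "image/gif"),
]


def detect_mime(data: bytes, filename: str = "") -> str:
    """Guess a MIME type from file content, falling back to the filename."""
    for magic, mime in SIGNATURES:
        if data.startswith(magic):
            return mime
    if data.startswith(b"PK\x03\x04"):  # ZIP container: is it a Word document?
        try:
            with zipfile.ZipFile(io.BytesIO(data)) as zf:
                if "word/document.xml" in zf.namelist():
                    return DOCX_MIME
        except zipfile.BadZipFile:
            pass
    guessed, _encoding = mimetypes.guess_type(filename)
    return guessed or "application/octet-stream"


def route(mime: str) -> str:
    """Map a MIME type to the extraction branch that should handle it."""
    if mime == "application/pdf":
        return "pdf"
    if mime == DOCX_MIME:
        return "docx"
    if mime.startswith("image/"):
        return "image"
    return "unsupported"
```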

a) PDF and DOCX

We use document parsers to extract raw text from resumes submitted as PDFs or Word documents. In addition to basic text, we also preserve useful metadata such as embedded URLs (e.g., LinkedIn profiles and portfolios).
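In Word documents, hyperlinks are not part of the visible text; they live in the document's relationships part, `word/_rels/document.xml.rels`, as external `hyperlink` relationships. The standard-library sketch below (the function name is hypothetical) shows one way to pull those URLs out. PDF links are stored differently, as link annotations on each page, and would need a PDF library.

```python
import io
import xml.etree.ElementTree as ET
import zipfile

RELS_PART = "word/_rels/document.xml.rels"
REL_NS = "{http://schemas.openxmlformats.org/package/2006/relationships}"
HYPERLINK_TYPE = "http://schemas.openxmlformats.org/officeDocument/2006/relationships/hyperlink"


def docx_hyperlinks(docx_bytes: bytes) -> list[str]:
    """Return external hyperlink targets from the main body of a .docx file.

    Headers and footers keep their own .rels parts and are not scanned here.
    """
    with zipfile.ZipFile(io.BytesIO(docx_bytes)) as zf:
        if RELS_PART not in zf.namelist():
            return []
        root = ET.fromstring(zf.read(RELS_PART))
    return [
        rel.get("Target")
        for rel in root.iter(f"{REL_NS}Relationship")
        if rel.get("Type") == HYPERLINK_TYPE and rel.get("TargetMode") == "External"
    ]
```

Since resumes are untrusted uploads, a hardened XML parser such as `defusedxml` is worth considering in place of the plain `ElementTree` parser.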

b) Images and Scanned Documents

For image-based resumes, including photos, scans, and screenshots, we preprocess the image with OpenCV and then apply Optical Character Recognition (OCR). This allows us to convert visual data into machine-readable text, even when the resume was poorly scanned, heavily stylized, or even submitted as a GIF.
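A common OpenCV preprocessing step before OCR is binarization with Otsu's method (in OpenCV, `cv2.threshold` with the `cv2.THRESH_OTSU` flag), which picks the gray level that best separates dark ink from a light background. To show what that step computes without requiring OpenCV, here is a dependency-free sketch over a flat list of 8-bit grayscale values. Treat the choice of Otsu thresholding as an illustrative assumption rather than a description of our exact preprocessing.

```python
def otsu_threshold(pixels: list[int]) -> int:
    """Return the threshold t that maximizes between-class variance (Otsu's method).

    Pixels <= t form one class (e.g. ink), pixels > t the other (e.g. paper).
    """
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * count for i, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    weight_low, sum_low = 0, 0
    for t in range(256):
        weight_low += hist[t]  # number of pixels with value <= t
        if weight_low == 0:
            continue
        weight_high = total - weight_low  # number of pixels with value > t
        if weight_high == 0:
            break
        sum_low += t * hist[t]
        mean_low = sum_low / weight_low
        mean_high = (sum_all - sum_low) / weight_high
        between_var = weight_low * weight_high * (mean_low - mean_high) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t


def binarize(pixels: list[int], threshold: int) -> list[int]:
    """Same rule as OpenCV's THRESH_BINARY with maxval=255: > threshold -> 255, else 0."""
    return [255 if p > threshold else 0 for p in pixels]
```

The binarized image, rather than the raw scan, is what would then be handed to the OCR engine.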

Because resumes often vary in design, layout, and structure, robust resume extraction is essential to maintain parsing accuracy across different formats. This step ensures that every candidate’s resume - regardless of how it was submitted - can be processed and passed to the next stage in a normalized, consistent format.

Resume Parsing via LLM

Once we have clean, extracted resume content (text), it’s formatted into a structured prompt and sent to a Vertex AI endpoint.

Unlike traditional rule-based resume parsing tools, which rely heavily on layout patterns or keyword matching, LLMs excel at interpreting unstructured data - including unformatted resumes or those written in free text. This allows us to extract meaning and context from resumes that would otherwise be difficult to parse using conventional methods.

To ensure consistent output, we use prompt engineering to define a clear schema. The LLM is instructed to return a strict JSON structure with resume attributes like:

- Full name

- Contact information

- Work experience

- Education

- Skills

- Certifications

This approach allows our resume parsing API to produce a structured output that can be validated, stored, and queried reliably.
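The prompt can be as simple as fixed instructions, an explicit schema skeleton, and the extracted text. The sketch below is a hypothetical template, not our production prompt: the schema mirrors the attributes listed above, and the instruction to use null instead of guessing reflects the extraction-only stance described later in this article. The resume text is appended by concatenation rather than `str.format`, so braces inside a resume cannot break the template.

```python
import json

# Hypothetical output schema; field names follow the attributes listed above.
OUTPUT_SCHEMA = {
    "full_name": "string",
    "contact": {"email": "string or null", "phone": "string or null", "urls": ["string"]},
    "work_experience": [
        {"title": "string", "company": "string", "start_date": "YYYY-MM", "end_date": "YYYY-MM or null"}
    ],
    "education": [{"institution": "string", "degree": "string or null", "end_date": "YYYY-MM or null"}],
    "skills": ["string"],
    "certifications": ["string"],
}

PROMPT_TEMPLATE = """You extract facts from resumes. Do not evaluate, rank, or summarize the candidate.
Return ONLY one JSON object that follows this schema exactly, with no markdown and no commentary.
Use null for anything the resume does not state; never guess or infer.
Use null for end_date when the role is current.

Schema:
{schema}
"""


def build_prompt(resume_text: str) -> str:
    """Combine fixed instructions, the schema skeleton, and the extracted resume text."""
    schema = json.dumps(OUTPUT_SCHEMA, indent=2)
    # Concatenate the resume instead of formatting it in: resumes may contain '{' or '}'.
    return PROMPT_TEMPLATE.format(schema=schema) + "\nResume:\n<<<\n" + resume_text.strip() + "\n>>>\n"
```

The resulting string would then be sent to the Vertex AI endpoint. With the Vertex AI Python SDK that could be `GenerativeModel(...).generate_content(prompt)`, optionally with a `GenerationConfig` that sets `response_mime_type="application/json"` where the chosen model supports it; the model name and configuration are deployment-specific and omitted here.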

Result Validation

Even though the LLM returns structured JSON, we do not assume the output is always complete or correct. Large Language Models can occasionally omit fields, return incorrect types, or introduce inconsistencies - especially when processing highly unstructured resumes.
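Some failures are about formatting rather than content: a model may wrap its JSON in a markdown code fence, or return something that is not a JSON object at all. A small, hypothetical helper like the one below normalizes that before schema validation runs, and turns any parse failure into a `ValueError` so it can be handled like every other validation error.

```python
import json
import re

# Matches a whole response wrapped in a markdown fence, with optional "json" tag.
_FENCED = re.compile(r"^`{3}(?:json)?\s*(.*?)\s*`{3}$", re.DOTALL)


def parse_llm_json(raw: str) -> dict:
    """Parse an LLM response into a dict, tolerating a surrounding code fence."""
    text = raw.strip()
    match = _FENCED.match(text)
    if match:
        text = match.group(1)
    try:
        data = json.loads(text)
    except json.JSONDecodeError as exc:
        raise ValueError(f"LLM output is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError(f"LLM output must be a JSON object, got {type(data).__name__}")
    return data
```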

To ensure data quality and consistency, we validate every response using a strict Pydantic schema. This includes:

- Field presence - Required fields like full_name, work_experience, skills, and education must be included.

- Data types and structure – Nested objects such as job history must follow a defined format (e.g., a list of objects with title, company, start_date, end_date).

- Value validation – Date ranges must be structured and accurate, empty strings or null values are flagged, and bad sections are rejected.
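These rules map naturally onto a Pydantic model. So that the example runs with the standard library alone, the sketch below implements the same three kinds of check by hand with dataclasses; in Pydantic, the required fields and types would be declared on a `BaseModel` and the date-order check would live in a validator. Field names follow the ones listed above; the `YYYY-MM` date format and the use of `null` for a current role are assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, datetime

REQUIRED_FIELDS = ("full_name", "work_experience", "skills", "education")


@dataclass(frozen=True)
class Job:
    title: str
    company: str
    start_date: date
    end_date: date | None  # None means the role is current


@dataclass(frozen=True)
class Resume:
    full_name: str
    work_experience: list[Job]
    skills: list[str]
    education: list[dict]


def _text(value: object, field: str) -> str:
    # Reject missing values, non-strings, and empty or whitespace-only strings.
    if not isinstance(value, str) or not value.strip():
        raise ValueError(f"{field}: expected a non-empty string, got {value!r}")
    return value.strip()


def _month(value: object, field: str) -> date:
    # Dates are assumed to arrive as YYYY-MM, as requested in the prompt.
    try:
        return datetime.strptime(value, "%Y-%m").date()
    except (TypeError, ValueError):
        raise ValueError(f"{field}: expected YYYY-MM, got {value!r}") from None


def _list(value: object, field: str) -> list:
    if not isinstance(value, list):
        raise ValueError(f"{field}: expected a list")
    return value


def parse_resume(data: dict) -> Resume:
    """Validate raw LLM output; raise ValueError describing the first problem found."""
    missing = [f for f in REQUIRED_FIELDS if f not in data]
    if missing:
        raise ValueError(f"missing required fields: {missing}")

    jobs = []
    for i, raw in enumerate(_list(data["work_experience"], "work_experience")):
        where = f"work_experience[{i}]"
        if not isinstance(raw, dict):
            raise ValueError(f"{where}: expected an object")
        start = _month(raw.get("start_date"), f"{where}.start_date")
        end = None if raw.get("end_date") is None else _month(raw["end_date"], f"{where}.end_date")
        if end is not None and end < start:
            raise ValueError(f"{where}: end_date is before start_date")
        jobs.append(Job(_text(raw.get("title"), f"{where}.title"),
                        _text(raw.get("company"), f"{where}.company"), start, end))

    return Resume(
        full_name=_text(data["full_name"], "full_name"),
        work_experience=jobs,
        skills=[_text(s, "skills[]") for s in _list(data["skills"], "skills")],
        education=_list(data["education"], "education"),
    )
```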

Using Pydantic allows us to perform structural and semantic validation early in the pipeline, before the data reaches BigQuery or any downstream system. This reduces the risk of bad data affecting dashboards, filters, or decision-making logic.

Resumes that fail validation are excluded from the batch, logged for later inspection, and optionally routed to a retry queue or manual review process. This validation step ensures that only high-quality, structured resume data is stored, which increases confidence in the entire automated screening process.
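The exclude-and-log behavior can be sketched as a batch partition step. The function name and failure-record shape are hypothetical; `validate` is any callable that raises `ValueError` on bad data. That also covers Pydantic, whose `ValidationError` subclasses `ValueError`. The key design choice is that one bad resume never aborts the rest of the batch.

```python
from __future__ import annotations

import logging
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")
logger = logging.getLogger("resume_pipeline")


def partition_batch(
    records: Iterable[tuple[str, dict]],
    validate: Callable[[dict], T],
) -> tuple[list[T], list[dict]]:
    """Validate each (resume_id, payload) pair and keep going when one fails.

    Returns (valid_results, failures). Each failure keeps the raw payload so it
    can be sent to a retry queue or to manual review later.
    """
    valid: list[T] = []
    failures: list[dict] = []
    for resume_id, payload in records:
        try:
            valid.append(validate(payload))
        except ValueError as exc:  # validators signal bad data with ValueError
            logger.warning("resume %s failed validation: %s", resume_id, exc)
            failures.append({"resume_id": resume_id, "error": str(exc), "payload": payload})
    return valid, failures
```

Only the `valid` list would continue to the BigQuery load; `failures` is what feeds the retry or review path.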

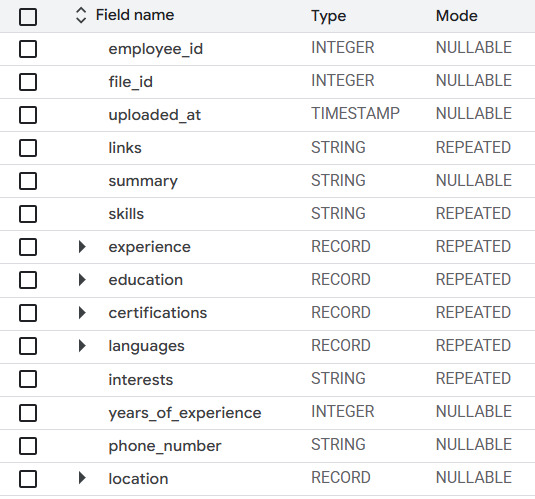

Data loading to BigQuery

Once each resume has been parsed and validated, the results are loaded into BigQuery in batches and written to a table with a predefined schema, as shown below.

Storing resume data in BigQuery gives us:

- Scalability - capable of handling large-scale resume data across thousands of applicants

- Flexibility - SQL-based access to explore and filter structured resumes

- Integration - seamless connection with Looker, enabling internal stakeholders to visually analyze and compare candidates
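A sketch of the batch-load preparation, assuming a hypothetical table schema: nested job history becomes a `REPEATED` `RECORD` column so that each resume stays one row, and the batch is serialized as newline-delimited JSON, a format BigQuery load jobs accept. The load itself could then go through the `google-cloud-bigquery` client (for example `Client.load_table_from_json`, or `load_table_from_file` with `SourceFormat.NEWLINE_DELIMITED_JSON`); that part is omitted here.

```python
from __future__ import annotations

import json
from datetime import datetime

# Hypothetical table schema, in the JSON schema format used by BigQuery tooling.
RESUME_SCHEMA = [
    {"name": "resume_id", "type": "STRING", "mode": "REQUIRED"},
    {"name": "full_name", "type": "STRING", "mode": "REQUIRED"},
    {"name": "skills", "type": "STRING", "mode": "REPEATED"},
    {
        "name": "work_experience",
        "type": "RECORD",
        "mode": "REPEATED",
        "fields": [
            {"name": "title", "type": "STRING", "mode": "NULLABLE"},
            {"name": "company", "type": "STRING", "mode": "NULLABLE"},
            {"name": "start_date", "type": "DATE", "mode": "NULLABLE"},
            {"name": "end_date", "type": "DATE", "mode": "NULLABLE"},
        ],
    },
    {"name": "ingested_at", "type": "TIMESTAMP", "mode": "REQUIRED"},
]


def _to_bq_date(value: str | None) -> str | None:
    # BigQuery DATE expects YYYY-MM-DD; month-only values are pinned to the 1st.
    if value is None:
        return None
    return f"{value}-01" if len(value) == 7 else value


def to_bq_row(resume_id: str, parsed: dict, ingested_at: datetime) -> dict:
    """Flatten one validated resume into a row matching RESUME_SCHEMA."""
    return {
        "resume_id": resume_id,
        "full_name": parsed["full_name"],
        "skills": list(parsed.get("skills", [])),
        "work_experience": [
            {
                "title": job.get("title"),
                "company": job.get("company"),
                "start_date": _to_bq_date(job.get("start_date")),
                "end_date": _to_bq_date(job.get("end_date")),
            }
            for job in parsed.get("work_experience", [])
        ],
        "ingested_at": ingested_at.isoformat(),
    }


def to_ndjson(rows: list[dict]) -> str:
    """Serialize rows as newline-delimited JSON, one row per line."""
    return "\n".join(json.dumps(row, ensure_ascii=False) for row in rows)
```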

While our current system focuses primarily on resume parsing and normalization, this structured format supports downstream use such as:

- Resume filtering and shortlist creation

- Resume screening dashboards

- Candidate comparison and tracking

- Potential future integration with AI-driven resume evaluation, job description matching, or resume summarization tools

In summary, once resumes are ingested into BigQuery, they become reliable, structured data - enabling faster and more consistent decision-making within the recruitment process.

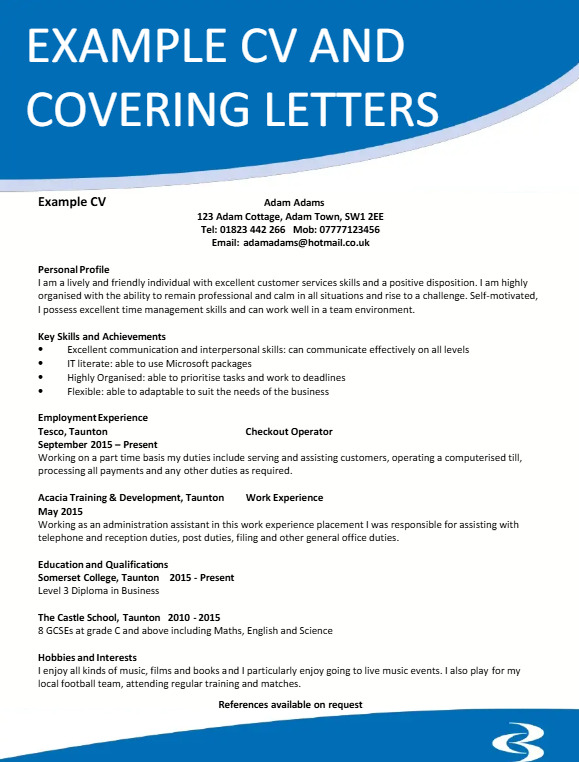

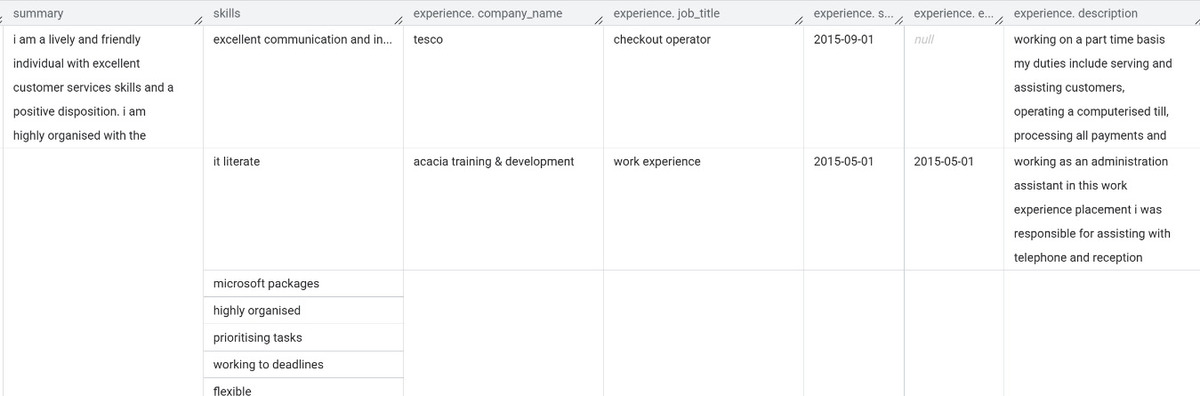

To demonstrate the pipeline in practice, the following section presents a simplified end-to-end example of a real resume submitted through the ATS:


This file is downloaded to our cloud storage bucket, classified as a PDF, and passed through the parsing pipeline. The resume content is converted into structured JSON using an LLM. After validation, the final result is stored in BigQuery as presented in the picture below.

3. Challenges in Resume Parsing & How We Overcame Them

Developing an automated resume parsing pipeline using LLMs presented several technical and operational challenges. Below we break down the key issues we faced and the solutions we implemented to make our system more robust, reliable, and scalable.

a) Inconsistent & Poor Input Quality

Challenge:

Resumes arrive in every imaginable format - from professionally structured PDFs to scanned resumes with creative layouts. Even screenshots, GIFs, and photos are regularly received. Some resumes also contain multiple languages or non-standard encodings, which further complicate data extraction.

Our Approach:

- We implemented a multi-stage preprocessing pipeline that detects file types and applies the correct extraction method (e.g., PDF parser, DOCX reader, OCR for images).

- For images and scans, we use OpenCV combined with OCR to reliably extract text, even from low-quality inputs.

- For multilingual resumes, we introduced language detection and selectively route resumes to appropriate prompts or models that support the detected language.

- If a resume cannot be parsed due to corruption, unsupported formats, or extraction failures, it is routed to a fallback queue for manual review, and an alert is triggered to ensure timely intervention.
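The routing-plus-fallback behavior can be sketched as a single dispatch function. The extractor registry, queue, and alert hook are hypothetical parameters supplied by the caller (in production they might be a PDF parser, a DOCX reader, an OCR call, a message queue, and a monitoring alert); the sketch only shows the control flow, in which no failure is silently dropped.

```python
from __future__ import annotations

from typing import Callable

Extractor = Callable[[bytes], str]


def extract_or_fallback(
    data: bytes,
    mime_type: str,
    extractors: dict[str, Extractor],
    fallback_queue: list[dict],
    alert: Callable[[str], None],
) -> str | None:
    """Run the extractor registered for `mime_type`.

    Returns the extracted text, or None after queueing the file for manual
    review and raising an alert when the format is unsupported, the parser
    fails, or no text comes back.
    """
    extractor = extractors.get(mime_type)
    if extractor is None:
        reason = f"unsupported format: {mime_type}"
    else:
        try:
            text = extractor(data)
        except Exception as exc:  # corrupted file, parser crash, OCR error, ...
            reason = f"extraction failed: {exc}"
        else:
            if text and text.strip():
                return text
            reason = "extraction returned no text"
    fallback_queue.append({"mime_type": mime_type, "reason": reason, "size_bytes": len(data)})
    alert(reason)
    return None
```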

This preprocessing ensures that no resume is discarded prematurely, and even imperfect inputs can often be recovered and parsed effectively.

b) Prompt Engineering Complexity

Challenge:

Getting consistent, structured output from an LLM depends heavily on how the prompt is designed. Early prompts frequently returned inconsistent results - missing fields, improperly nested structures, or verbose outputs.

Our Approach:

- We iterated on the prompt design dozens of times, experimenting with phrasing, structure, and examples to guide the LLM’s behavior.

- We added explicit instructions and schema definitions in the prompt, including examples and format constraints (e.g., "return in strict JSON with these fields…").

c) Data Validation & Error Handling

Challenge:

Even with a solid prompt, LLMs can produce output that is structurally valid but semantically flawed. For example:

- Confusing job titles with company names

- Incorrect or inconsistent date ranges

- Omission of key fields

- Broken JSON or type mismatches

Our Approach:

- We enforce a Pydantic schema on the LLM output to validate structure, data types, and field completeness.

- We implemented custom validation rules, such as checking chronological order of employment, flagging overlapping date ranges, or clarifying inconsistent job titles.
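The chronological checks can be sketched as a small function over (start, end) pairs. It rests on assumptions: dates are already parsed to month precision, an open end date means a current role, and the six-month gap threshold is an arbitrary placeholder. Results are returned as warnings rather than rejections, because overlaps (say, freelance work alongside a job) and career gaps are things to flag for a human, not reasons to discard a candidate.

```python
from __future__ import annotations

from datetime import date


def _months(d: date) -> int:
    """Months since year 0, so month differences become plain subtraction."""
    return d.year * 12 + d.month - 1


def check_timeline(
    jobs: list[tuple[date, date | None]],
    today: date,
    max_gap_months: int = 6,
) -> list[str]:
    """Return human-readable warnings about a candidate's employment dates.

    `jobs` holds (start, end) pairs in resume order; end=None means "current role".
    """
    issues: list[str] = []
    spans = []
    for i, (start, end) in enumerate(jobs):
        end = end or today
        if end < start:
            issues.append(f"job {i}: ends before it starts")
            continue
        if start > today:
            issues.append(f"job {i}: starts in the future")
        spans.append((start, end, i))

    spans.sort()
    latest_end, latest_idx = None, None  # furthest end date seen so far
    for start, end, i in spans:
        if latest_end is not None:
            if start < latest_end:
                issues.append(f"jobs {latest_idx} and {i} overlap")
            elif (gap := _months(start) - _months(latest_end)) > max_gap_months:
                issues.append(f"gap of {gap} months before job {i}")
        if latest_end is None or end > latest_end:
            latest_end, latest_idx = end, i
    return issues
```

Tracking the furthest end date seen so far, instead of comparing only neighboring jobs, keeps a long-running role from producing false gap warnings for shorter jobs nested inside it.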

By treating the LLM as an intelligent but fallible component, we built guardrails that prevent bad data from contaminating downstream systems. For more serious hallucinations, we always keep a human in the loop.

d) Bias & Fairness in Resume Parsing

Challenge:

One key reason for adopting LLM-based parsing was to reduce human bias - but it’s equally important to ensure that automated systems don’t inadvertently introduce new biases based on model training data.

For example, an LLM might:

- Implicitly favor certain universities or companies in its interpretations

- Overemphasize job titles or brand names

- Misinterpret career gaps or non-linear paths

Our Approach:

- We audit parsed data regularly to check for systematic biases or unintended patterns.

- Most importantly, our system focuses strictly on factual extraction - no evaluative judgments or interpretations are made by the LLM.

4. Privacy & Data Protection

Handling resumes means handling personal data - names, contact information, employment history, and more. Ensuring that this data is processed securely and in compliance with privacy standards is of paramount importance.

Secure Cloud Infrastructure

All resume parsing and data processing happens entirely within our Google Cloud Platform (GCP) environment. We leverage GCP services such as:

- Cloud Storage for raw file storage

- Vertex AI for model inference

- BigQuery for structured data storage and analysis

These services are covered by Google Cloud’s Service Terms, which include strong commitments on data confidentiality and usage.

No LLM Training on Candidate Data

When processing sensitive information like resumes, data privacy is our highest priority. Our system is designed so that all inputs to the LLM are processed in a secure, isolated environment and are never stored beyond the immediate request. There are no feedback loops or mechanisms that send candidate data back to model training or fine-tuning pipelines.

Strict data segregation policies ensure that candidate data is never shared with external services. These operational controls form the backbone of our privacy safeguards.

Complementing these measures, Google Cloud’s AI/ML Services contractually prohibit the use of customer data for training or fine-tuning models without explicit customer consent. This contractual commitment reinforces the privacy guarantees built into our infrastructure.

Together, these technical and legal safeguards ensure that candidate data remains private, isolated, and used solely for the intended AI processing: no retention by the model service, no sharing, and no model training.

5. Summary: Reflections & Takeaways

In building our automated resume parsing pipeline, we set out to tackle a major challenge in recruiting: extracting structured, reliable data from unstructured, inconsistent resumes at scale. By leveraging modern NLP techniques, LLMs (via Vertex AI), and cloud infrastructure (GCP, BigQuery), we replaced slow and biased manual review with a scalable, auditable, and automation-friendly system.

What We Achieved

- Objective, Fairer Screening

By focusing on the content of resumes rather than their visual formatting, we reduced visual bias and promoted more equitable candidate evaluation.

- Significant Time Savings

Automated parsing reduced the manual burden on recruiters, allowing them to prioritize higher-value tasks like candidate engagement and decision-making.

- Structured, Queryable Data

Resume data is now cleanly structured and stored in BigQuery, enabling downstream use in filtering, analytics, dashboards, and future AI applications.

What Made It Work

- Preprocessing Is Critical

Reliable parsing begins with high-quality input. Our system detects file types and applies the appropriate extraction logic, using OCR for images and scanned documents, to ensure that clean, normalized text reaches the LLM.

- LLMs Require Careful Prompting

While LLMs are powerful at interpreting unstructured data, they are only as good as their instructions. Structured prompts, schema definitions, and example-guided prompting were essential to produce consistent JSON outputs.

- Validation Is Mandatory

Even with well-designed prompts, hallucinations and inconsistencies can occur. We use Pydantic to enforce strict schema validation, checking for missing fields, incorrect types, invalid date ranges, and other issues. A human-in-the-loop review process remains essential for maintaining trust in edge cases or where quality must be guaranteed.

- Modular, Maintainable Architecture

The pipeline is broken into distinct stages: file ingestion, preprocessing, parsing, validation, and storage. This modular design allows independent updates, simpler debugging, and greater long-term flexibility.

- Security and Privacy Built-In

All processing occurs within our secure GCP environment. Resumes are never used for model training or shared externally, in line with strong data protection standards and backed by Google Cloud’s Service Terms.

Challenges We Addressed

- Poor and Inconsistent Input Quality

Scanned images, screenshots, low-quality documents, and multilingual resumes required robust OCR, fallback logic, and dynamic routing based on language or file type.

- Prompt Engineering Complexity

Getting the LLM to return valid JSON consistently required extensive iteration and testing of prompt formats, schemas, and constraints.

- Semantic Errors in Output

Even structurally valid JSON could contain flawed data, such as misclassified roles or illogical date ranges. Validation logic was critical for flagging and correcting these issues.

- Bias and Interpretability

We limited the LLM’s role to factual extraction rather than evaluation, and regularly audited output for fairness to ensure no new biases were introduced by the automation process.

Curious how your resume holds up in an AI-powered pipeline?

Go ahead - test our parsing system. Submit your CV and experience recruiting that’s fast, fair, and format-proof.

Apply now & put us to the test!

Want to tame talent acquisition chaos at scale?

Book a free 30-min consultation - see how AI turns unstructured CVs into structured, searchable talent data.

Final Takeaway

Large Language Models (LLMs) offer a powerful solution for handling resume parsing - but only when used thoughtfully. Success requires more than just calling an API. It involves engineering for validation, observability, maintainability, and fairness. By designing with these principles in mind, we turned LLMs from an experimental tool into a reliable system that integrates seamlessly into our hiring pipeline and supports better decision-making across the board.

