MLOps: The Key to Success in AI/ML

MLOps, the practice of managing the lifecycle of AI/ML projects, is critical for effectively implementing artificial intelligence. As machine learning platforms grow increasingly complex, teams face challenges in managing data, maintaining model quality, and deploying models in production environments. The lack of consistent processes and tools leads to inefficiencies and scaling difficulties. MLOps offers a solution by standardizing and automating key stages, which enhances the efficiency of AI/ML teams and ensures smoother project execution.

Modern Challenges in AI/ML Management

Contemporary AI/ML projects encounter numerous challenges, from acquiring and processing production data to monitoring the performance of deployed models. Versioning models, tracking their parameters and metrics, and ensuring that experiments are reproducible all require advanced tools. Additionally, rapidly changing data can degrade model performance in production, which makes continuous monitoring and updates necessary. MLOps platforms address these issues, reducing errors and shortening the time it takes to deploy a model.

Azure MLOps as a Comprehensive Machine Learning Environment

Azure Machine Learning stands out as a versatile platform supporting MLOps capabilities. It offers essential features such as data, experiment, and model management, automated model training, and scalable deployments in production environments. Integration with code repositories (e.g., GitHub) and tracking tools like MLflow enables efficient tracking of metrics and model versions. Furthermore, Azure MLOps projects facilitate continuous monitoring of model performance and rapid adaptation to data changes, making the platform a strong choice for teams aiming to enhance efficiency and reduce risks in AI/ML projects.

Main challenges of MLOps

There are many potential challenges when trying to integrate machine learning operations practices. Let’s dive deeper into what those are and how to overcome them.

Data: Poor-quality production data (e.g., noisy, missing, or incorrect values) can lead to inaccurate model training, testing, and predictions. Garbage in, garbage out: models trained on bad data yield poor results in production. To catch this early, we can set up a Data Quality signal in data drift monitoring, which shows when the quality of our data has decreased.
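One common data-quality metric is the null value rate: the share of records in which a feature is missing. The sketch below shows the idea in plain Python. It is only an illustration of the metric, not how the Azure ML signal is implemented, and the batch contents, column names and the 0.3 threshold are made up for the example.

```python
import math


def is_missing(value):
    """Treat None, empty strings and NaN as missing."""
    if value is None or value == "":
        return True
    return isinstance(value, float) and math.isnan(value)


def null_value_rate(rows, column):
    """Fraction of rows whose `column` is missing (0.0 for an empty batch)."""
    if not rows:
        return 0.0
    missing = sum(1 for row in rows if is_missing(row.get(column)))
    return missing / len(rows)


# Hypothetical inference batch and threshold, for illustration only.
batch = [
    {"temperature": 21.5, "pressure": 1.01},
    {"temperature": None, "pressure": 1.02},
    {"temperature": 22.1, "pressure": float("nan")},
    {"temperature": 20.9, "pressure": 0.99},
]
THRESHOLD = 0.3

for column in ("temperature", "pressure"):
    rate = null_value_rate(batch, column)
    status = "ok" if rate <= THRESHOLD else "quality alert"
    print(f"{column}: null rate {rate:.2f} -> {status}")
```

Running the same check on every incoming batch and comparing the rate with an agreed threshold gives an early warning before bad data reaches training or inference.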

Experiments: Keeping track of experiments, including model configurations, data versions, and results, can become overwhelming as teams scale. When we lose track of previous model and data versions and the results they produced, we can't be sure that the project is improving and going in the right direction, and we end up wasting time and duplicating effort. To prevent this, use tools like MLflow or AzureML to log configurations, metrics, and artefacts. Agree on methods for evaluating and comparing the performance of trained models, and apply them every time a new model version is trained. Log the hyperparameters and data used each time, to understand their impact on model performance and identify optimization opportunities. That way we can track progress and manage machine learning experiments.

Model deployment: Ensuring that the deployed model meets real-life performance and latency requirements is crucial, and choosing the right machine learning architecture is key. We need to decide between batch and online deployment depending on our priorities and expected traffic. A good general practice, especially with online deployments, is not to overload the inference pipeline with preprocessing steps.

Managing Datasets in MLOps with Azure Storages

Effective data management is the foundation of every successful Machine Learning project and, in a broader context, a critical component of MLOps practices. MLOps, or Machine Learning Operations, focuses on streamlining and automating the lifecycle of machine learning models—from development and deployment to monitoring.

In this context, Azure Blob Storage serves as a central component for managing data workflows, ensuring the availability, organization, and security of large datasets. Below, we highlight the key features of Blob Storage that make it indispensable for both ML projects and comprehensive MLOps pipelines.

How does Blob Storage ensure scalability and flexibility for Azure MLOps Platform?

In the ever-evolving MLOps landscape, the volume and variety of data are growing rapidly as teams iteratively refine models and incorporate new sources to increase accuracy and insight. The Azure Blob Storage service is designed to meet these demands, offering seamless scalability to accommodate data sets of any size, from gigabytes to petabytes, while maintaining performance and reliability. Compatibility with a wide range of data formats, such as Parquet, Avro and ORC, not only reduces the storage footprint but also speeds up data processing by optimizing read and write operations.

Azure Blob Storage is distinguished by its ability to support the coexistence of raw data, intermediate processed data and model-ready data sets within a unified storage solution. This flexibility simplifies data management and provides seamless transitions between different stages of the MLOps pipeline, increasing productivity and operational efficiency.

What's more, the service provides tiered storage options, enabling organizations to effectively manage costs by aligning data storage with usage patterns. Deep integration with the Azure platform ecosystem - such as Data Factory, Synapse Analytics and Azure Machine Learning - further streamlines workflows, enabling the creation of robust data pipelines that can be customized to meet the dynamic requirements of modern MLOps.

How does Azure Blob Storage address security and access management for sensitive data in MLOps?

In the rapidly evolving field of MLOps, where data volume and complexity grow at an unprecedented pace, Azure Blob Storage provides a reliable and scalable solution for managing datasets of all sizes. Blob Storage is specifically designed to handle unstructured data, offering the flexibility and performance needed to support the data-driven demands of MLOps workflows.

Azure Blob Storage delivers seamless scalability, enabling storage from gigabytes to petabytes without compromising on performance. Its support for a wide range of file formats, such as Parquet, Avro, ORC, and JSON, enhances processing efficiency by optimizing storage space and improving data access speeds. This versatility ensures that raw datasets, processed data, and model-ready files can coexist within a unified storage system, simplifying data organization and retrieval. Additionally, Blob Storage integrates seamlessly with Azure’s ecosystem, including Azure Machine Learning, Azure Data Factory, and Azure Synapse Analytics, enabling robust end-to-end pipelines. Its built-in lifecycle management policies allow for automatic tiering of data across hot, cool, and archive tiers, ensuring cost-efficiency by aligning storage expenses with data access patterns.
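To make the tiering idea concrete, here is a minimal sketch that builds a lifecycle management policy document as a Python dictionary and prints it as JSON. The rule name, the `data/1234/table_data` prefix and the 30/180-day thresholds are assumptions chosen for illustration. The resulting JSON would still have to be applied to the storage account separately, for example through the portal or the Azure CLI.

```python
import json


def build_lifecycle_policy(prefix, cool_after_days, archive_after_days):
    """Build a lifecycle policy that moves block blobs under `prefix`
    to the cool tier and later to the archive tier."""
    if not 0 < cool_after_days < archive_after_days:
        raise ValueError("expected 0 < cool_after_days < archive_after_days")
    return {
        "rules": [
            {
                "enabled": True,
                "name": "tier-aging-training-data",  # hypothetical rule name
                "type": "Lifecycle",
                "definition": {
                    "filters": {
                        "blobTypes": ["blockBlob"],
                        # The prefix starts with the container name.
                        "prefixMatch": [prefix],
                    },
                    "actions": {
                        "baseBlob": {
                            "tierToCool": {
                                "daysAfterModificationGreaterThan": cool_after_days
                            },
                            "tierToArchive": {
                                "daysAfterModificationGreaterThan": archive_after_days
                            },
                        }
                    },
                },
            }
        ]
    }


# Illustrative values: cool after 30 days, archive after 180 days.
policy = build_lifecycle_policy("data/1234/table_data", 30, 180)
print(json.dumps(policy, indent=2))
```

Keeping the policy in code makes the thresholds reviewable alongside the rest of the pipeline configuration.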

Blob Storage also prioritizes security and compliance, offering features such as encryption at rest and in transit, role-based access control (RBAC), and integration with Azure Active Directory (now Microsoft Entra ID). These capabilities help keep sensitive data secure and support compliance with regulations like GDPR and HIPAA.

With its scalability, flexibility, and integration with Azure’s suite of tools, Azure Blob Storage empowers organizations to build resilient and efficient MLOps platforms that can adapt to the dynamic requirements of modern machine learning workflows.

Versioning datasets and automating the process with Azure ML

Dataset versioning is a key aspect of working with data in Azure Machine Learning projects. This allows you to track changes in the data, reproduce the results of experiments, and ensure consistency throughout the model lifecycle. Azure Machine Learning offers tools that simplify this process - from manually logging datasets in notebooks to automation using YAML components and integration with services such as EventGrid.

# Import necessary libraries
from azureml.core import Dataset, Workspace
from azure.ai.ml import MLClient
from azure.ai.ml.entities import Data
from azure.ai.ml.constants import AssetTypes
from azure.identity import DefaultAzureCredential
import mltable

# Define paths to data files (e.g., CSV files in Azure Blob Storage)
path1 = 'https://xyz.blob.core.windows.net/data/1234/table_data/2024-06-01/table1.csv'
path2 = 'https://xyz.blob.core.windows.net/data/1234/table_data/2024-07-01/table2.csv'

# Load Azure ML workspace configuration
workspace = Workspace.from_config()

# Retrieve the default datastore
# (optional, not used directly here but can be used for uploading local data)
datastore = workspace.get_default_datastore()

# Create and register the dataset as a tabular dataset in Azure ML
dataset_table = Dataset.Tabular.from_delimited_files(path=[path1, path2])
dataset_table.register(
    workspace=workspace,
    name='table_dataset_1234',
    description='Tabular dataset for data processing',
    create_new_version=True
)

# Alternatively: Use MLTable to define the tabular dataset
paths = [
    {"pattern": "https://xyz.blob.core.windows.net/data/1234/table_data/2024-06-01/table1.csv"},
    {"pattern": "https://xyz.blob.core.windows.net/data/1234/table_data/2024-07-01/table2.csv"}
]

# Create an MLTable from the defined paths
# (from_delimited_files adds the CSV-parsing step, so the table loads as rows and columns)
ml_table = mltable.from_delimited_files(paths)
ml_table.save("./tabular_dataset_1234")

# Initialize the Azure ML client
ml_client = MLClient.from_config(DefaultAzureCredential())

# Define a data asset in Azure ML
my_table_data = Data(
    path="./tabular_dataset_1234",
    type=AssetTypes.MLTABLE,
    description="Tabular dataset for line 1234",
    name="tabular_dataset_1234"
)

# Register the data asset in Azure ML
ml_client.data.create_or_update(my_table_data)

# <component>
$schema: https://azuremlschemas.azureedge.net/latest/commandComponent.schema.json
name: register_dataset
display_name: Register a new version of dataset
type: command
inputs:
  datastore:
    type: string
  dataset_path:
    type: string
code: ./pipeline_components
environment: azureml:custom-env@latest
command: >-
  python dataset.py
  --datastore ${{inputs.datastore}}
  --dataset_path ${{inputs.dataset_path}}
# </component>

With YAML definitions, such as the register_dataset example, you can precisely define the data registration process: specify the inputs (e.g., the location of the datastore and file paths), the runtime environment and the command that will register the new data in Azure Machine Learning. This process can then be used in pipelines or triggered automatically.

Automation can be achieved by integrating EventGrid with Azure Storage. For example, when a new CSV file is uploaded to Azure Blob Storage, EventGrid can trigger a register_dataset component that automatically registers the new data version. Combined with Python code that can be used in notebooks, this creates a scalable solution to easily manage data and its versions.
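As a rough illustration of the glue between the two services, the sketch below takes an Event Grid blob-created event and derives the two inputs expected by the register_dataset component. It assumes the standard `Microsoft.Storage.BlobCreated` event shape with the blob URL under `data.url`. Mapping the container name to the `datastore` input is purely an assumption for this example, and the code that actually submits the component (for instance from an Azure Function) is left out.

```python
from urllib.parse import urlparse


def dataset_inputs_from_event(event):
    """Return register_dataset inputs for a blob-created CSV event, else None."""
    if event.get("eventType") != "Microsoft.Storage.BlobCreated":
        return None
    url = event.get("data", {}).get("url", "")
    path = urlparse(url).path
    if not path.lower().endswith(".csv"):
        return None
    # The URL path looks like /<container>/<blob path>.
    container, _, blob_path = path.lstrip("/").partition("/")
    if not container or not blob_path:
        return None
    # Assumption: the datastore is named after the container.
    return {"datastore": container, "dataset_path": blob_path}


# Trimmed-down example event; real events carry more fields.
example_event = {
    "eventType": "Microsoft.Storage.BlobCreated",
    "subject": "/blobServices/default/containers/data/blobs/1234/table_data/2024-06-01/table1.csv",
    "data": {
        "url": "https://xyz.blob.core.windows.net/data/1234/table_data/2024-06-01/table1.csv"
    },
}

print(dataset_inputs_from_event(example_event))
```

Events for other file types or other event types return None, so the handler can simply skip them.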

This approach saves time and ensures that each experiment uses accurately defined and recorded versions of datasets, which is essential for creating reliable and reproducible ML models.

Automation and Organization of Research Processes

Azure Machine Learning Studio enables comprehensive tracking of experiment results, including model parameters, test outcomes, and configurations, and integrates seamlessly with MLflow to enhance experiment management. MLflow allows users to log parameters, metrics, and outputs in a structured and consistent manner, enabling seamless model monitoring in any Azure MLOps project. It also supports model versioning, making it easier to compare configurations and reproduce experiments, ensuring transparency, reproducibility, and efficiency in managing machine learning workflows.

Here's how you can check model statistics:

Log Metrics and Parameters.

During an experiment, you can use MLflow to log parameters and metrics. For example:

import mlflow
from sklearn.metrics import mean_squared_error

def main():
    mlflow.start_run()

    # train your model (y_test and y_pred_nn come from this step)
    mse_nn = mean_squared_error(y_test, y_pred_nn)
    metrics = {"mean_squared_error": mse_nn}

    # save metrics
    mlflow.log_metrics(metrics)
    mlflow.end_run()

View Statistics in Azure ML Studio.

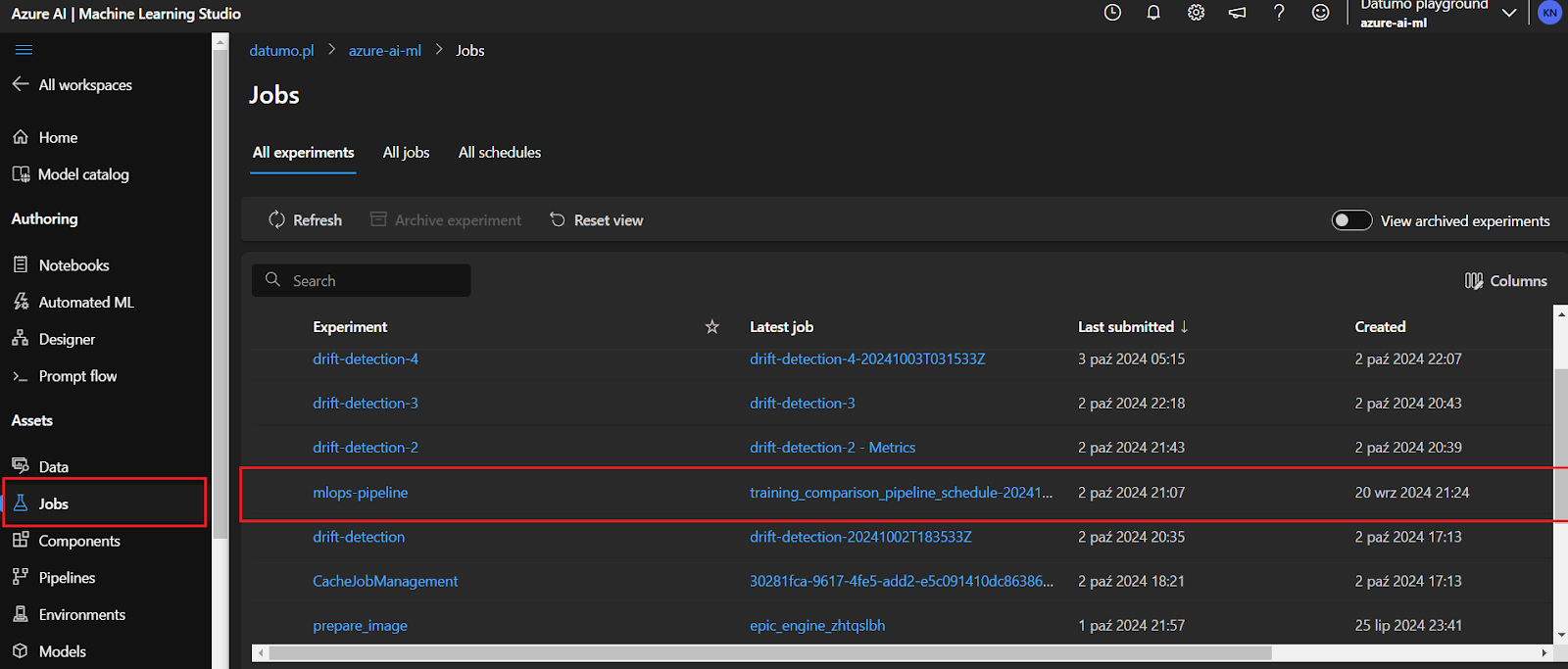

After logging into your Azure MLOps project, navigate to the Azure Machine Learning Studio workspace, open the "Jobs" section in the menu on the left side, and select the relevant experiment.

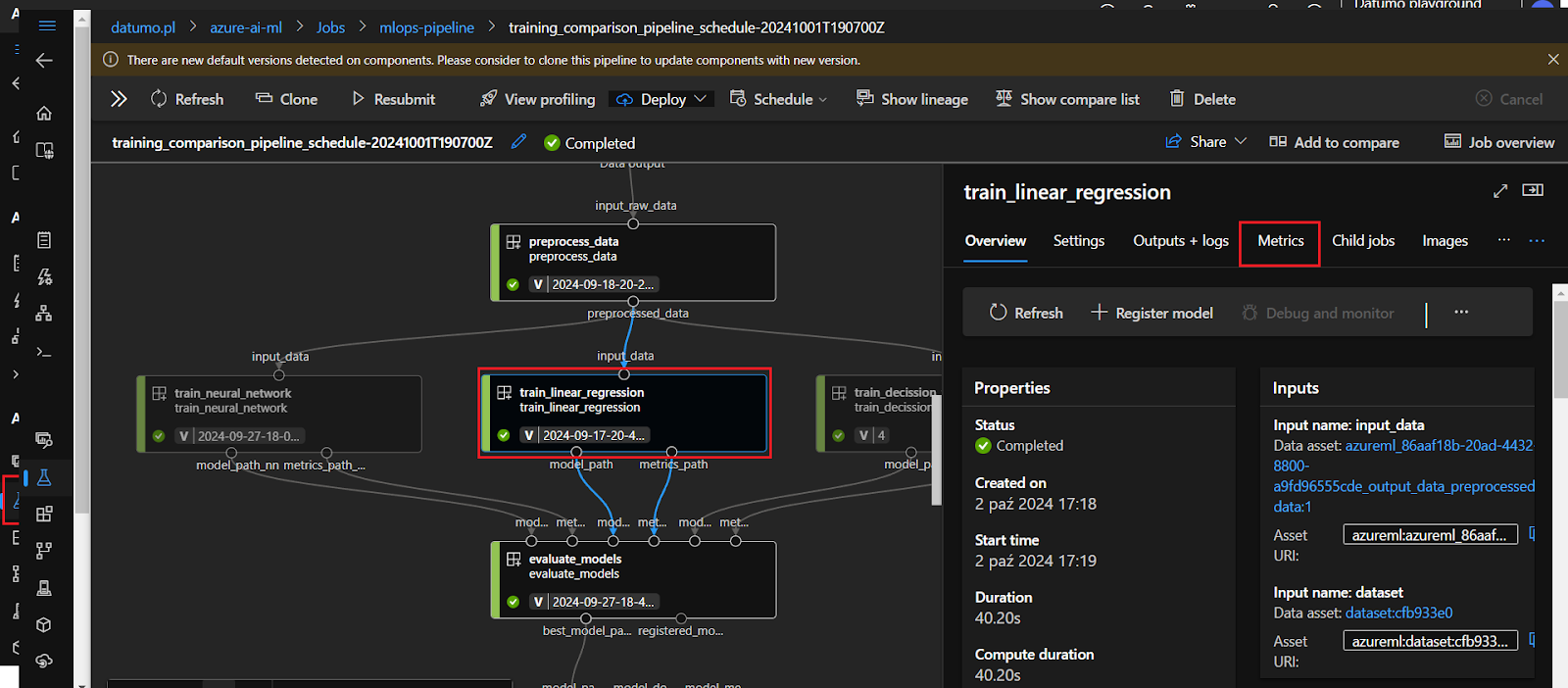

After opening the Job that you want to investigate, select the component where you implemented metrics. Finally, in the menu on the left side, open the Metrics tab.

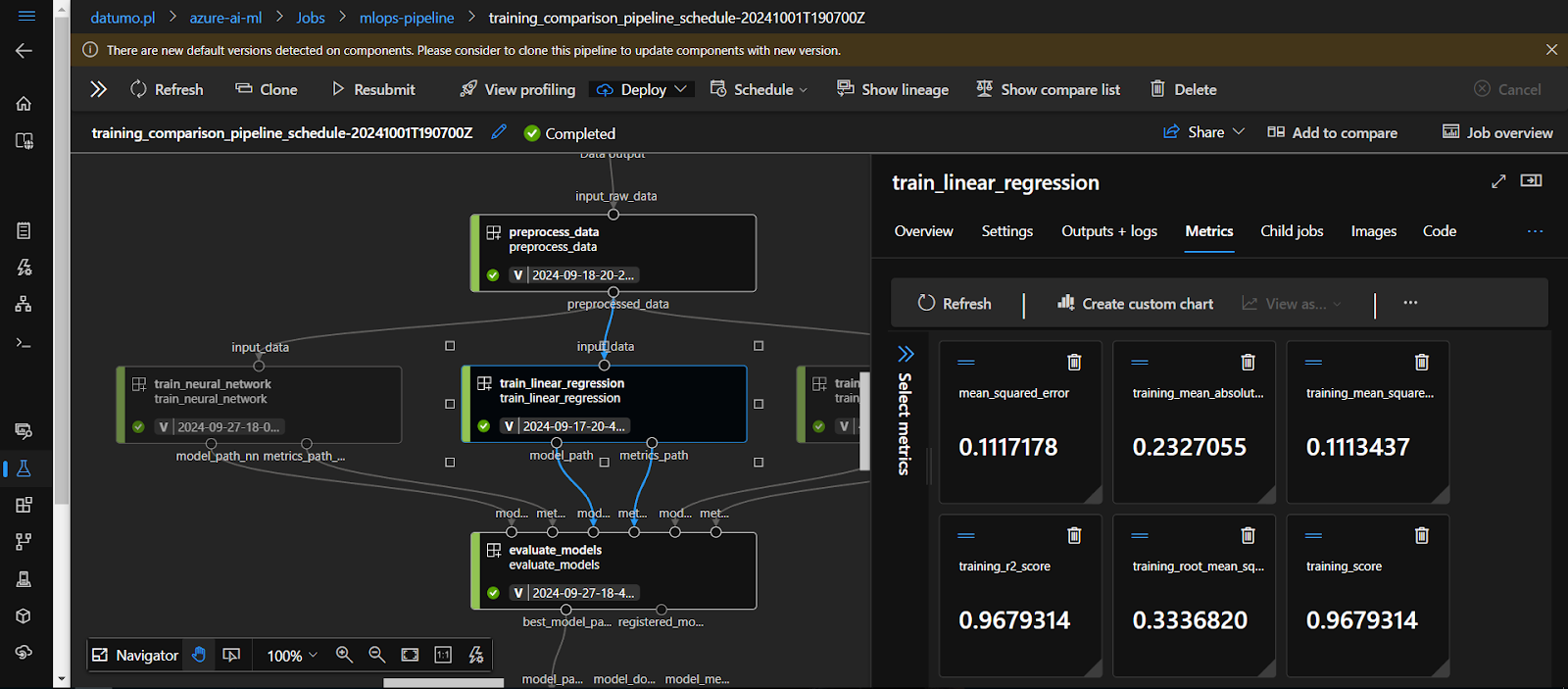

You'll see logged metrics like accuracy, loss, or any custom metrics you defined, along with parameters such as learning rate or batch size.

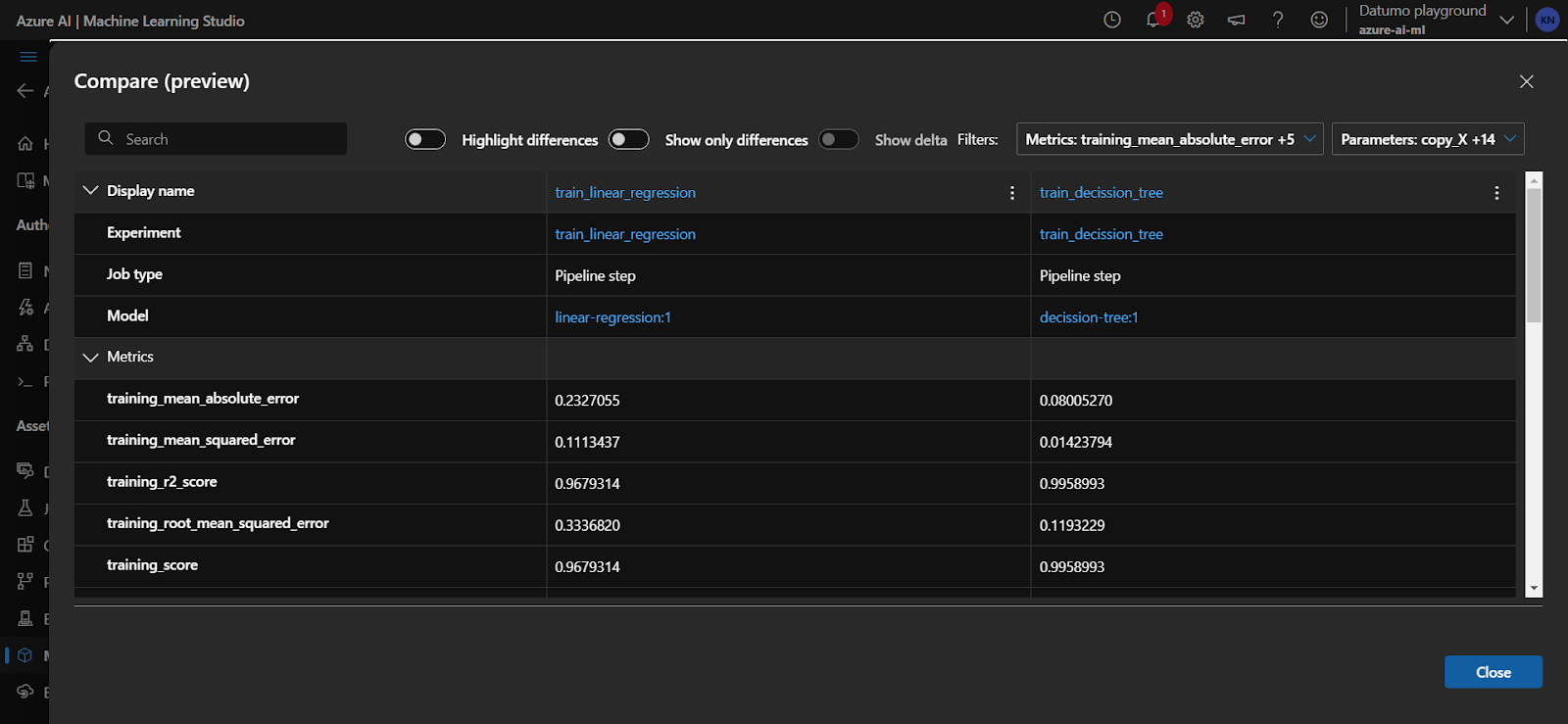

The Azure MLOps platform makes it easy to compare different machine learning models. In the Models tab, you can select specific model runs and use the Compare > Add to compare list option to include them in a comparison list. If metrics were logged with the mlflow.log_metrics(metrics) command when the component ran, they are shown in a table, giving a clear, readable view for direct comparison of model performance.

Compare iterations and visualize metrics over time.

MLflow allows you to compare different runs of the same experiment, analyze how parameter changes affect performance, and choose the best-performing configuration. For instance:

- In the ML Studio interface, select multiple runs and view side-by-side comparisons of metrics such as accuracy or loss.

- Plot the accuracy metric over multiple iterations to observe trends.
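The same comparison can also be done in code once run summaries are available. The sketch below ranks a handful of runs by a chosen metric. The run records are hypothetical hand-written dictionaries rather than the output of any MLflow call, and the metric name matches the mean_squared_error logged earlier.

```python
def rank_runs(runs, metric, lower_is_better=True):
    """Sort runs by `metric`, skipping runs that never logged it."""
    scored = [run for run in runs if metric in run["metrics"]]
    return sorted(
        scored,
        key=lambda run: run["metrics"][metric],
        reverse=not lower_is_better,
    )


# Hypothetical run summaries, for illustration only.
runs = [
    {"run_id": "run-a", "params": {"learning_rate": 0.1, "batch_size": 32},
     "metrics": {"mean_squared_error": 0.48}},
    {"run_id": "run-b", "params": {"learning_rate": 0.01, "batch_size": 32},
     "metrics": {"mean_squared_error": 0.31}},
    {"run_id": "run-c", "params": {"learning_rate": 0.001, "batch_size": 64},
     "metrics": {}},  # failed before logging any metric
]

ranked = rank_runs(runs, "mean_squared_error")
for run in ranked:
    print(run["run_id"], run["params"], run["metrics"]["mean_squared_error"])
```

For error metrics such as MSE lower is better. For accuracy-style metrics, pass `lower_is_better=False`.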

Export and Analyze Logs.

You can also export logged data for further analysis. For instance, downloading metrics as a CSV file enables custom visualization using tools like Pandas or Matplotlib.

import pandas as pd
import matplotlib.pyplot as plt

# "experiment_logs.csv" is the metrics file exported from the job
data = pd.read_csv("experiment_logs.csv")
data.plot(x="epoch", y="accuracy", kind="line")
plt.show()

MLflow provides its own user interface to view experiment logs. If you have logged parameters and metrics using MLflow, you can access the MLflow Tracking UI.

Resource and Model Iteration Management

Azure ML Studio supports efficient management of resources and model iterations through integration with popular code repositories like GitHub and Azure Repos. This enables the creation of reproducible experiments, which are crucial for teamwork and conducting research aligned with best practices.

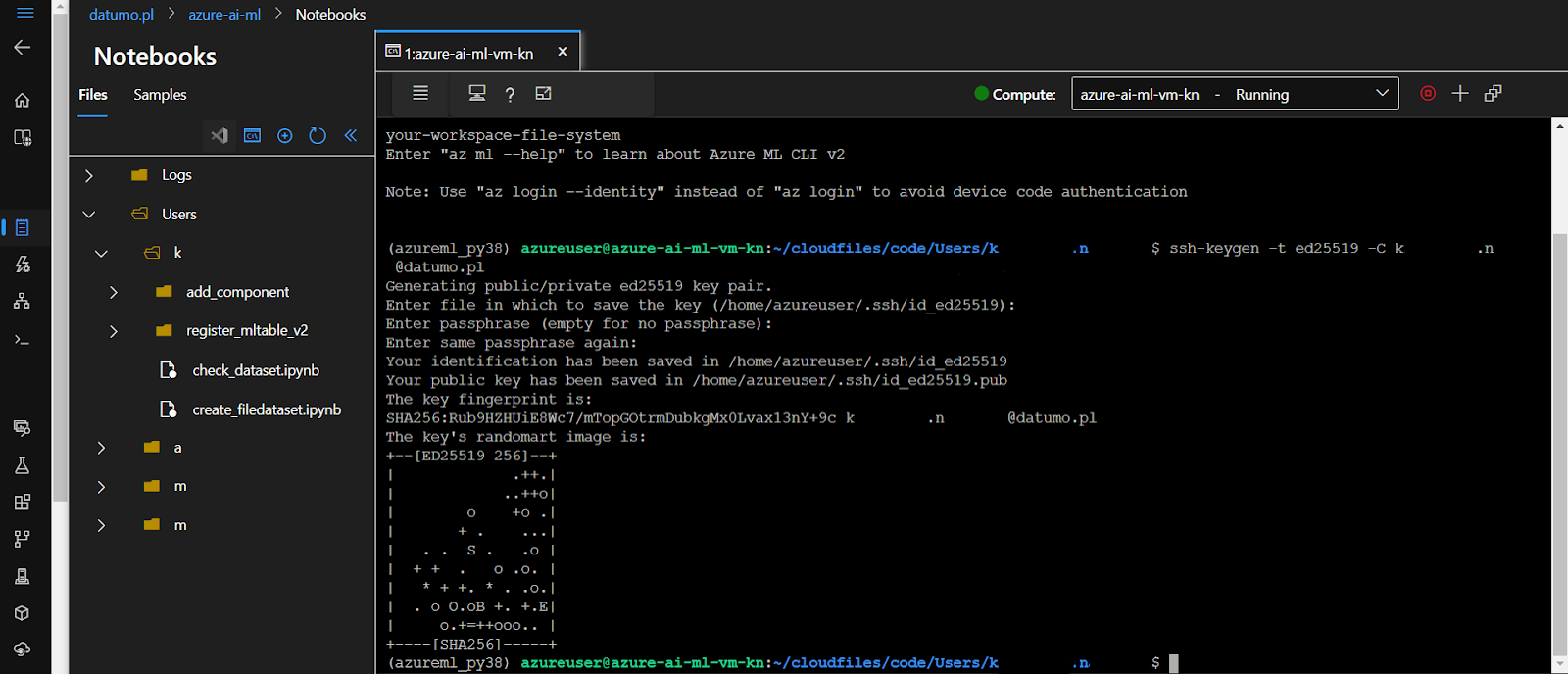

Version control in Azure ML is similar to regular Git workflows. To pull code from a repository, you can use the terminal available in Azure ML Studio. The terminal provides access to a full Git client, allowing you to clone repositories and work with Git commands via the CLI. You can open the terminal by navigating to the Notebooks tab and clicking the terminal icon.

Before cloning a repository, you must generate an SSH key and add it to your Git account.

Azure Machine Learning simplifies tracking code from Git repositories by automatically logging metadata during experiments. This includes details like the repository URL, branch name, and commit hash. This data is stored with each experiment, enabling users to trace results back to the exact version of the code used. You can check these properties on the Jobs page.

MLOps Tutorial: CI/CD in MLOps workflow

After the data sources and pipelines are set up, we need to make sure they can be used to train and deploy the production model, as well as create predictions on a regular, scheduled basis. In this Azure MLOps tutorial, we'll use CI/CD pipelines to support the machine learning lifecycle: model training, model validation, model deployment, and monitoring of machine learning solutions.

In previous sections we've built reproducible machine learning pipelines with Azure Machine Learning. Now, with Azure DevOps, we can automate the processes of building, testing, deploying, and getting predictions from models on AzureML. Machine learning models are typically improved over time, and new, better versions are created. After updating any aspect of the machine learning project (such as the code, data, features, or model architecture), it is good practice to have a system in place that automatically retrains the model and deploys the improvements.

Invoking the endpoint with the deployed model is yet another good practice to keep in place as a smoke test or sanity check. That ensures that the basic functionality of the model works as expected before proceeding with using the model in production.
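A smoke test of this kind can stay very small. The sketch below sends one sample request through an `invoke` callable and checks only the shape of the response: a JSON list with one numeric prediction per input row. The request format, the `fake_endpoint` stand-in and the expected response shape are all assumptions. In a real pipeline `invoke` would wrap whatever SDK or CLI call reaches the deployed endpoint.

```python
import json


def smoke_test(invoke, sample_request, expected_rows):
    """Send one request and verify that the response looks like predictions."""
    raw_response = invoke(json.dumps(sample_request))
    predictions = json.loads(raw_response)
    if not isinstance(predictions, list):
        raise AssertionError(
            f"expected a JSON list, got {type(predictions).__name__}"
        )
    if len(predictions) != expected_rows:
        raise AssertionError(
            f"expected {expected_rows} predictions, got {len(predictions)}"
        )
    for value in predictions:
        # bool is a subclass of int, so exclude it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise AssertionError(f"non-numeric prediction: {value!r}")
    return predictions


def fake_endpoint(request_body):
    """Stand-in for the deployed model: returns 0.0 for every input row."""
    rows = json.loads(request_body)["data"]
    return json.dumps([0.0 for _ in rows])


sample = {"data": [[1.0, 2.0], [3.0, 4.0]]}
print(smoke_test(fake_endpoint, sample, expected_rows=2))
```

The test deliberately says nothing about prediction quality. Its only job is to fail fast when the endpoint is unreachable or returns something malformed.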

The goal is to have a system in place that automates retraining and deploying the model to ensure improvements to the code, data etc. are effectively integrated. Such a machine learning platform allows data scientists and machine learning engineers to focus on high-value tasks such as model design and experimentation.

For the purposes of this example, let's focus on code changes that trigger retraining and redeployment. Let's examine Azure DevOps pipelines (or GitHub CI/CD pipelines) that automate this process when the code changes in the form of a pull request.

Prerequisites

- Azure DevOps account and a project within your organisation (or alternatively, GitHub Actions configured for your project).

- GitHub repository with your ML project linked to Azure DevOps (or alternatively, an Azure DevOps repository).

Azure DevOps set up

- Connect Azure DevOps with AzureML using Service Connection.

A Service Connection lets Azure DevOps connect securely to AzureML and other external services.

To set up a Service Connection in Azure DevOps, go to Project Settings → Service Connections → New Service Connection → Azure Resource Manager and follow the prompts.

- Store secrets and environment variables in Azure DevOps Library as variable groups.

This allows us to reuse the same YAML files for multiple use cases and environments, and prevents us from hardcoding fixed values. Variables stored in a variable group can be used as environment variables during execution of Azure DevOps pipelines (or equivalent variables can be managed using secrets or environment files in GitHub Actions workflows).

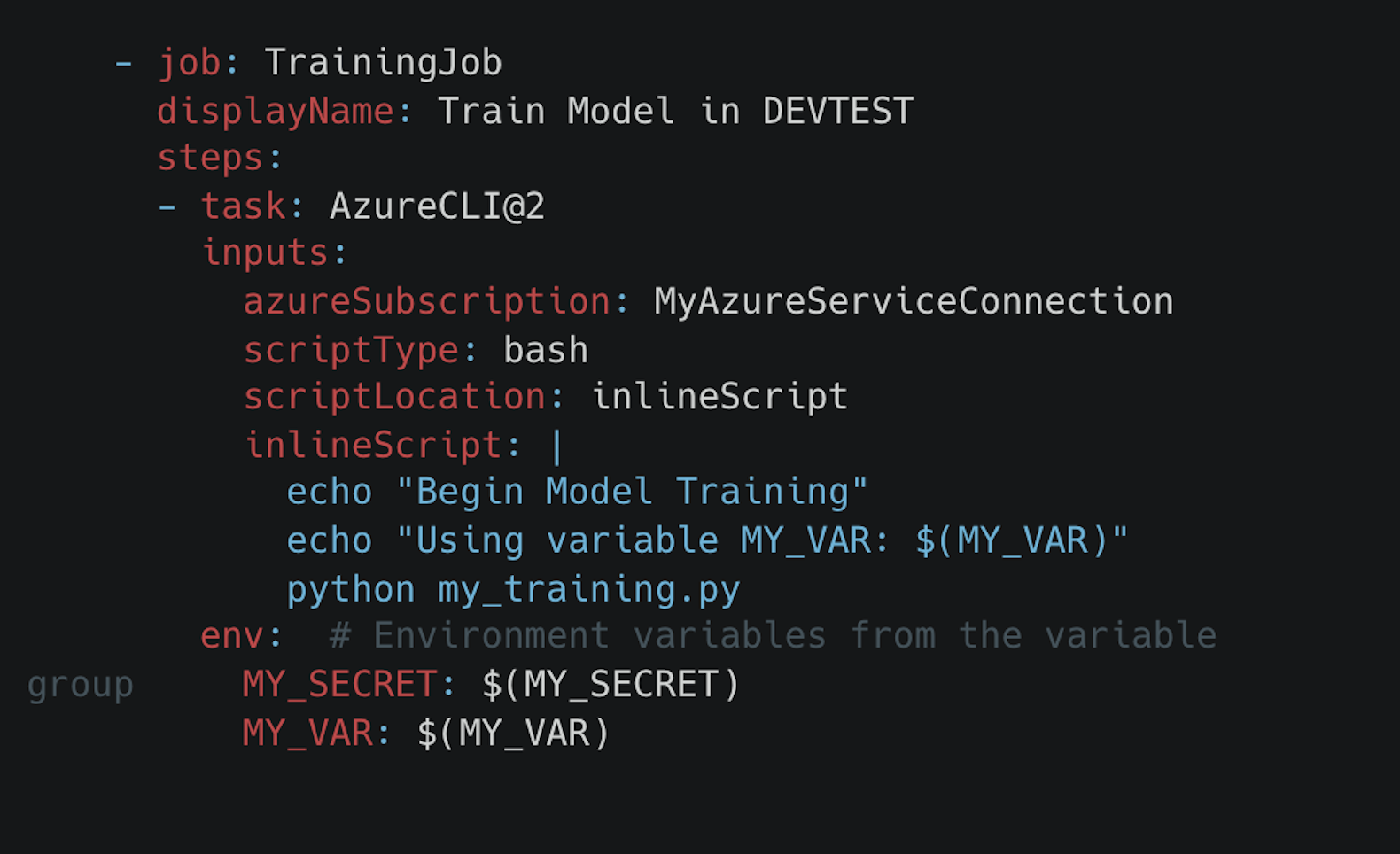
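On the script side, the values from the variable group arrive as ordinary environment variables. A minimal sketch of reading and validating them is shown below. The variable names `SUBSCRIPTION_ID`, `RESOURCE_GROUP` and `WORKSPACE_NAME` are hypothetical and should be replaced with whatever the variable group actually defines.

```python
import os

# Hypothetical variable names; use the ones defined in your variable group.
REQUIRED_VARIABLES = ("SUBSCRIPTION_ID", "RESOURCE_GROUP", "WORKSPACE_NAME")


def load_settings(environ=None):
    """Read required pipeline variables and fail early if any are missing."""
    environ = os.environ if environ is None else environ
    missing = [name for name in REQUIRED_VARIABLES if not environ.get(name)]
    if missing:
        raise RuntimeError("missing pipeline variables: " + ", ".join(missing))
    return {name.lower(): environ[name] for name in REQUIRED_VARIABLES}


# Example with an explicit mapping instead of the real environment.
example_env = {
    "SUBSCRIPTION_ID": "00000000-0000-0000-0000-000000000000",
    "RESOURCE_GROUP": "my-resource-group",
    "WORKSPACE_NAME": "my-workspace",
}
settings = load_settings(example_env)
print(settings["workspace_name"])
```

Failing at the start of the script with a clear message is usually easier to debug than a later authentication or lookup error deep inside a training job.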

Azure DevOps Pipelines to automate MLOps on Azure AI

Use AzureML CLI and/or SDK v2 to create your training, deployment and invocation logic.

Then, chain those together as an Azure DevOps job that trains, deploys the model and invokes the endpoint.

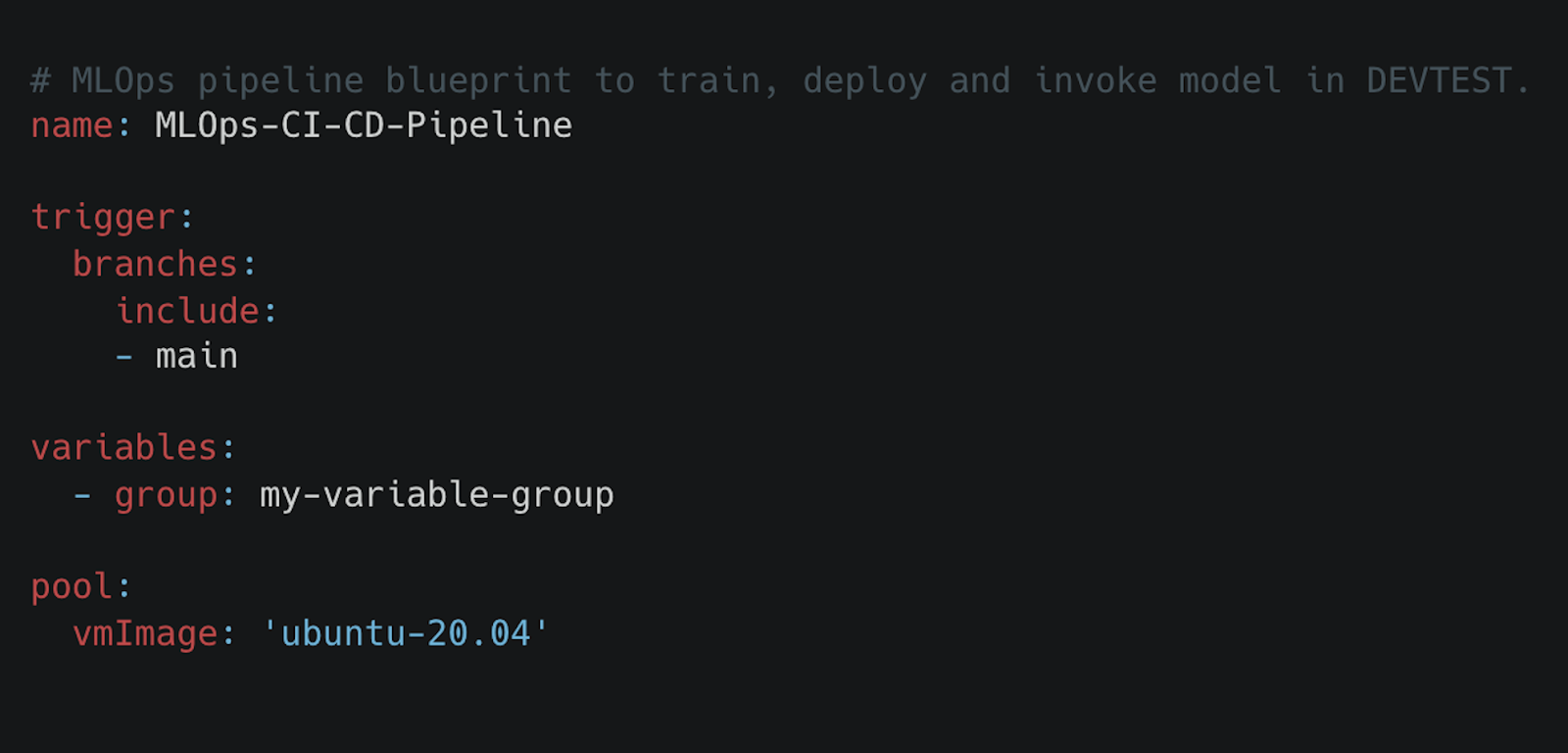

- MLOps-CI-CD-Pipeline serves as an identifier for the pipeline in Azure DevOps.

- trigger configures the pipeline to automatically run whenever there are changes pushed to the main branch of the repository.

- my-variable-group references a variable group that contains predefined values (e.g., secrets, configuration settings).

- ubuntu-20.04 specifies the agent pool and virtual machine image to be used for running the pipeline.

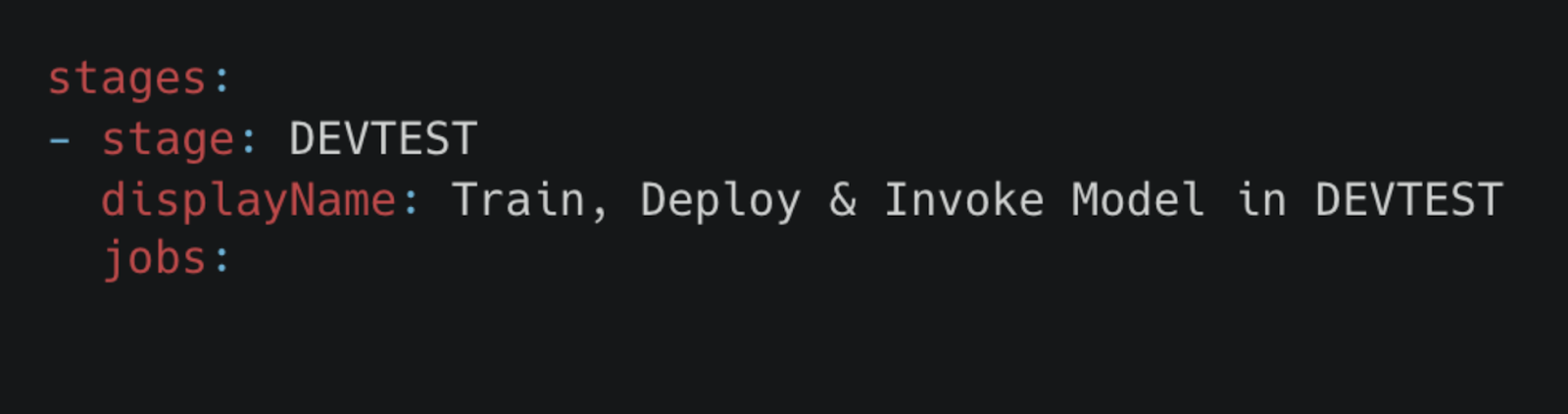

- DEVTEST is the internal identifier assigned to the stage.

- jobs defines the set of jobs to be executed within this stage.

- task is a set of commands to be executed using the AzureCLI task with a specified Azure service connection.

- my_training.py is the Python script that submits the training job to Azure ML.

- env holds values pulled from the pipeline's variable group. Variables are passed as environment variables to the script, making them available during the execution of the training job.

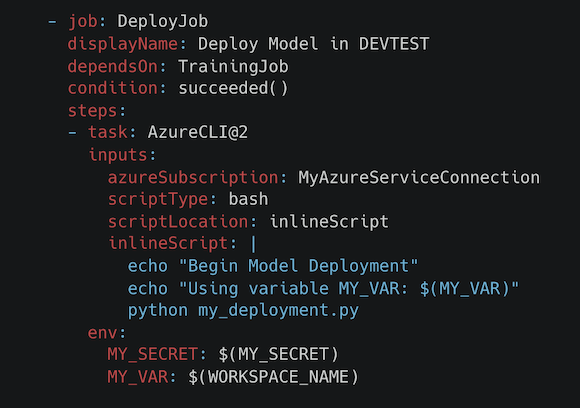

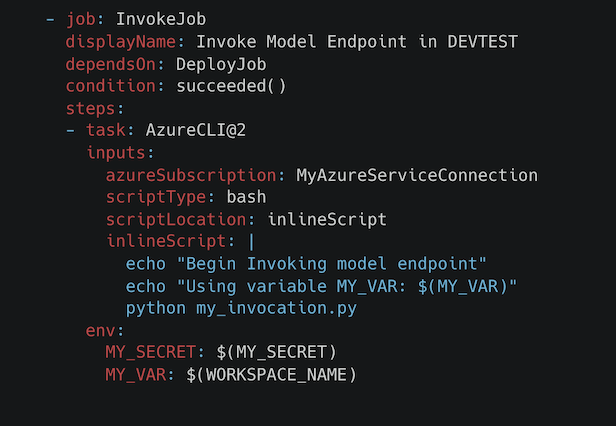

- dependsOn: TrainingJob together with condition: succeeded() specifies that the DeployJob runs only after the TrainingJob has completed successfully.

- task is, as before, a set of commands to be executed using the AzureCLI task with a specified Azure service connection.

- my_deployment.py is the Python script that handles the deployment of the trained model in Azure ML. Here we could also write an Azure CLI command.

- InvokeJob follows the same pattern: it depends on the previous job, executes a Python script or CLI command that invokes the deployed model, and uses environment variables and azureSubscription.

Besides train, deploy and invoke, other jobs can be added as well, such as running tests or promoting model artefacts to higher machine learning environments.

In order to block pull requests until the Azure DevOps pipeline is successful, you can add Build Validation to your repository. This will ensure that on the main branch there is only the code that passed the smoke test.

Data drift monitoring in production

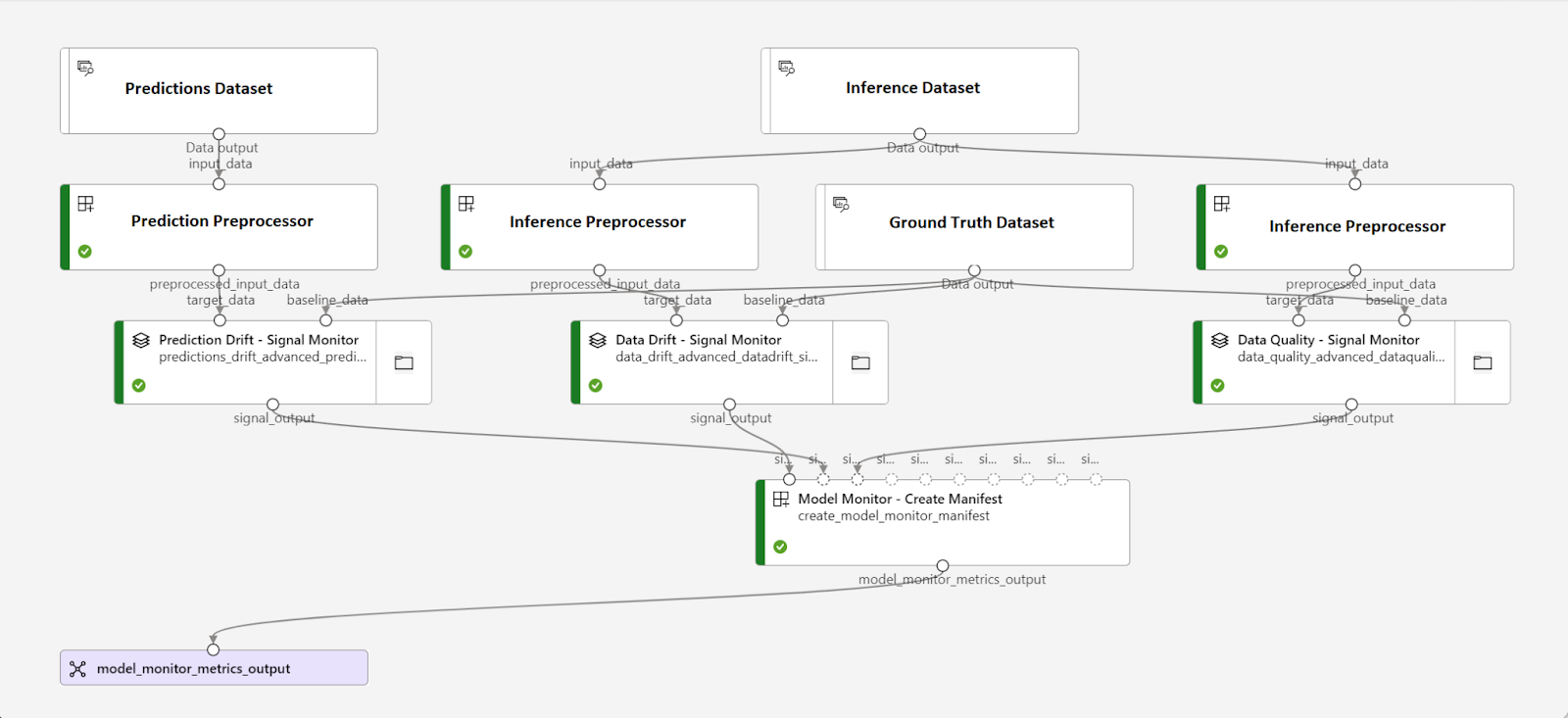

To monitor the quality of model predictions and inference data, as well as the performance of the current production model, the best practice is to set up Data Drift Monitoring. It consists of signals (currently 7 to choose from) that check for drift with respect to the model objective (regression, classification, NLP, genAI) and a chosen metric.

Data drift monitoring works by performing statistical computations on streamed production inference data and reference data. Reference data, or Ground Truth data, is the dataset we specify as the baseline representing the ideal behaviour of the model's input features and outputs. It is used to check whether new incoming data (or model predictions) deviate significantly from what the model was trained on or evaluated with. Typically, a model's training data can be used as the reference dataset.

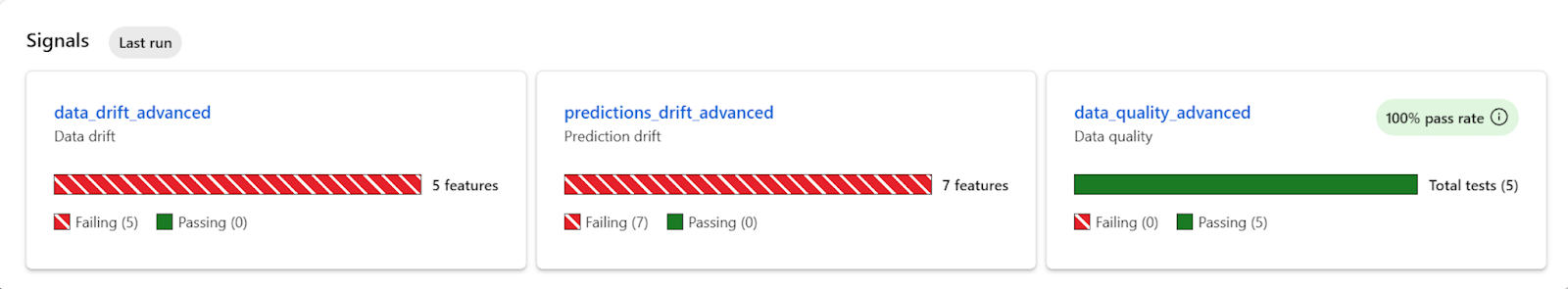

In the example below, data drift monitoring was set up using 3 signals: Prediction Drift Signal, Data Drift Signal and Data Quality Signal.

Think of a signal as a "sensor" for your model's health or data quality. Just like a thermometer measures temperature, a signal computes the distribution of inference or prediction datasets. You can choose the metric(s) used by the signal and the threshold for each.

Let's sum up the example drift monitoring setup and its 3 signals:

- Prediction Drift Signal - compares the distribution of the Predictions dataset with that of the Ground Truth Dataset. I chose Jensen-Shannon Distance as the metric and set its threshold to 0.01.

- Data Drift Signal - compares the distribution of the Inference dataset with that of the Ground Truth Dataset. I chose Jensen-Shannon Distance as the metric and set its threshold to 0.01.

- Data Quality Signal - checks the data integrity of the Inference Dataset by comparing it to the Ground Truth Dataset. I chose Null value rate as the metric and set its threshold to 0.01.

Data drift monitoring jobs can be set to run on a schedule. After a job runs successfully, we can see its results in the Monitoring tab. In this case, drift was detected by the first 2 signals, meaning that the distributions of my inference and prediction data were not as close to the reference dataset as I would like. On the other hand, the data appears to be essentially free of nulls, as it passed the quality check (the 3rd signal).
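To give a feel for what a distribution-based signal measures, here is a minimal pure-Python sketch of the Jensen-Shannon distance between two histograms that share the same bins. It is not the Azure ML implementation: the base-2 logarithm (which keeps the distance between 0 and 1) and the example bin counts are assumptions, while the 0.01 threshold mirrors the value used in the signals above.

```python
import math


def normalize(counts):
    """Turn raw bin counts into a probability distribution."""
    total = sum(counts)
    if total <= 0:
        raise ValueError("histogram must contain at least one observation")
    return [count / total for count in counts]


def kl_divergence(p, q):
    """KL(p || q) in bits; bins with p_i == 0 contribute nothing."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def jensen_shannon_distance(reference_counts, production_counts):
    """Square root of the Jensen-Shannon divergence (base 2, range 0..1)."""
    if len(reference_counts) != len(production_counts):
        raise ValueError("histograms must use the same bins")
    p = normalize(reference_counts)
    q = normalize(production_counts)
    mixture = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    divergence = 0.5 * kl_divergence(p, mixture) + 0.5 * kl_divergence(q, mixture)
    return math.sqrt(max(divergence, 0.0))  # guard against tiny negative rounding


# Hypothetical bin counts for one feature, for illustration only.
reference_counts = [50, 30, 20]
production_counts = [20, 30, 50]
THRESHOLD = 0.01

distance = jensen_shannon_distance(reference_counts, production_counts)
drifted = distance > THRESHOLD
print(f"distance={distance:.4f} drifted={drifted}")
```

Identical distributions give a distance of 0 and completely disjoint ones give 1, so a threshold as low as 0.01 flags even small shifts in the data.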

Summary

MLOps is a critical framework for managing the lifecycle of AI/ML projects, addressing challenges such as data management, model versioning, and deployment. As highlighted in the discussion on modern challenges in AI/ML management, without a structured approach, teams struggle with inefficiencies, leading to delayed or failed deployments. The Azure MLOps ecosystem provides a comprehensive environment to tackle these issues, offering seamless integration with tools like MLflow for experiment tracking, Azure Blob Storage for scalable data management, and CI/CD pipelines for automating model training and deployment.

A key challenge in MLOps, as noted earlier, is ensuring data quality, as poor-quality datasets can significantly degrade model performance. This is mitigated using data drift monitoring and dataset versioning with Azure ML, which enables tracking changes in data over time. Similarly, model deployment must balance real-world performance and latency requirements, an issue addressed by choosing between batch and online inference strategies.

The importance of automation in MLOps workflows, emphasized in the CI/CD tutorial, ensures that retraining, deployment, and model validation are continuous, reducing manual effort and improving reproducibility. By leveraging these best practices, organizations can build scalable, resilient AI/ML pipelines, enhancing efficiency while minimizing risks associated with model performance degradation and infrastructure management.
