Turn models into measurable business value, consistently and at scale

We design and implement enterprise-grade MLOps platforms for your data science teams. Build and deploy models faster, operate them safely, and control costs across Google Cloud Platform, Microsoft Azure, and Databricks.

Why MLOps?

In modern organizations, the challenge is not training a single model; it’s operationalizing dozens of them across teams, environments, and regulatory boundaries. Ad‑hoc scripts, manual handoffs, and “best-effort” monitoring don’t scale.

We build reproducible, governed, and cost‑efficient ML lifecycles so your data scientists can focus on innovation while the platform ensures:

Consistent deployments

standardized CI/CD for models, features, and data pipelines

Observability by default

continuous tracking of data drift, quality, and performance SLAs

Compliance and auditability

versioning, lineage, approvals, and access controls

Cloud efficiency

FinOps guardrails, autoscaling, and right‑sizing from day one

Read more about MLOps solutions on our blog.

Why us?

We deliver large‑scale data & ML platforms in regulated and high‑throughput environments.

Banking & fintech

credit scoring and transaction classification on Databricks with full MLOps, API serving, and governance

E‑commerce

customer data platforms, recommendations, real‑time analytics

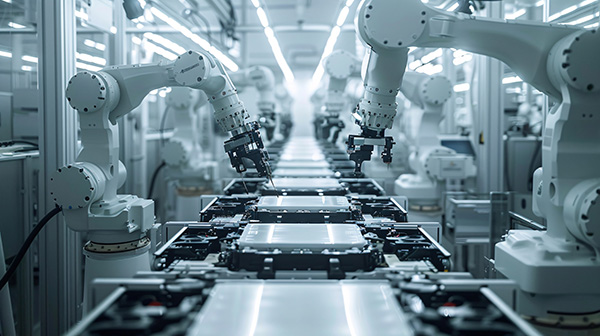

Manufacturing

computer vision at the edge integrated into central MLOps

Our team of platform architects and data engineers has built and scaled enterprise ML platforms across highly regulated and data-intensive industries. We’ve migrated legacy Hadoop clusters to modern, AI-ready cloud lakehouses, delivered governed MLOps for financial institutions, and enabled real-time ML for global e-commerce. We know what it takes.

As an official partner of Google Cloud, Microsoft Azure, and Databricks, Datumo combines deep technical expertise with direct access to the latest platform capabilities and best practices. Our approach is pragmatic: we design MLOps platforms that data scientists want to use and that IT and compliance teams can trust.

Core capabilities

- Reference architectures for Azure ML / Databricks / Vertex AI

- Secure landing zones: private endpoints, firewalls, VNet/VPC peering, IAM policies

- Data lakehouse integration (Delta/Parquet), catalog, and governance

- Model, data, and feature pipelines with Azure DevOps, Cloud Build, or GitHub Actions

- Reproducible training with MLflow/Vertex AI Pipelines; artifact and model registries

- Promotion across dev → test → prod with approvals and automated checks

- Feature stores (e.g., Databricks Feature Store) with online/offline parity

- Data quality and contracts (Great Expectations, lakehouse table constraints)

- Reusable datasets and notebooks with clear ownership and SLAs

- Real‑time and batch serving (Databricks Model Serving, Azure ML endpoints, Vertex AI)

- Scalable API gateways, A/B and canary releases, shadow deployments

- Hardware acceleration where justified; cost/perf tuning baked in

- End‑to‑end observability: latency, throughput, error budgets, model metrics

- Data & model drift detection, automatic alerts, retraining hooks

- Integration with Prometheus/Grafana/Azure Monitor, incident playbooks

- Lineage from data to model to endpoint; immutable versioning

- Approval workflows, role‑based access, policy‑as‑code

- Audit‑ready reports for regulated industries

- Workload tagging, budgets, and cost allocation per team/project

- Autoscaling, spot/preemptible instances where safe, caching and I/O optimizations

- Continuous cost/performance reviews and recommendations
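To make "data & model drift detection, automatic alerts, retraining hooks" more concrete, here is a minimal sketch of one common drift check, the Population Stability Index (PSI), comparing a feature's training-time distribution with what a model sees in production. It is an illustration only, not our production implementation: the function names, the equal-width binning, and the 0.2 alert threshold (a frequently cited rule of thumb) are all assumptions chosen for the example.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a baseline (training) sample and a live (serving) sample.

    Bin edges are equal-width over the baseline's range; live values outside
    that range are clipped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def bin_shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clip out-of-range values
            counts[idx] += 1
        total = len(values)
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / total, eps) for c in counts]

    e, a = bin_shares(expected), bin_shares(actual)
    # PSI = sum over bins of (actual share - expected share) * ln(actual / expected)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def needs_retraining(psi: float, threshold: float = 0.2) -> bool:
    # Hypothetical retraining hook: 0.2 is a rule of thumb, not a standard
    return psi >= threshold


if __name__ == "__main__":
    baseline = [i / 100 for i in range(1000)]            # 0.00 .. 9.99
    live_same = [i / 100 + 0.005 for i in range(1000)]   # almost identical
    live_shifted = [i / 100 + 5 for i in range(1000)]    # shifted by +5

    for name, live in [("same", live_same), ("shifted", live_shifted)]:
        psi = population_stability_index(baseline, live)
        print(f"{name}: PSI={psi:.3f}, retrain={needs_retraining(psi)}")
```

In a real platform, a check like this would typically run on a schedule against logged serving data, push the score to the monitoring stack, and trigger an alert or a retraining pipeline when the threshold is crossed.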

Reference tech stack

Engagement model

Discovery & Assessment (2–4 weeks)

current state, gaps, quick wins, TCO

MVP Platform (4–8 weeks)

core CI/CD, registry, serving, monitoring

Scale‑out (ongoing)

add teams, domains, real‑time, governance hardening

Run & Improve

optional managed service with SLOs and cost reviews

Deliverables: architecture & IaC, runbooks, playbooks, dashboards, training for data scientists and ML engineers, and a roadmap aligned with business priorities.

Benefits

MLOps Platform Solutions

FAQs

No. We support Azure, Databricks, and GCP, including hybrid and multi‑cloud setups.

Yes. We onboard existing notebooks / scripts into pipelines with tracking, testing, and serving.

Policy‑as‑code, audit trails, RBAC, lineage, and controlled promotions are standard.

Budgeting, tagging, right‑sizing, autoscaling, and regular cost/perf reviews.

Fill out the form to receive a no-obligation consultation.

Have a question or just want to talk?

Maciej Kępa
