Introduction

In the realm of big data processing, Apache Spark stands as a robust framework, enabling data engineers and analysts to efficiently handle massive datasets. Introduced in Spark 3, Adaptive Query Execution (AQE) is a pivotal component within Spark, driving the optimization of query performance. Central to AQE are its configuration parameters, which play a crucial role in shaping query execution. In this article, we will embark on an exploration of the AQE-related Spark SQL configuration parameters. We will uncover how these parameters influence query performance and delve into their significance in fine-tuning Spark applications. A comprehensive understanding of these parameters is essential for unlocking the full potential of Spark's query optimization capabilities.

Skew join optimization

The 'spark.sql.adaptive.skewJoin.enabled' property is a key component of the tools available in Apache Spark that help optimize the performance and efficiency of SQL queries. Its main purpose is to deal with the issue of skewed data joins, which often lead to extended execution times and resource wastage. Skewed joins occur when one or several data partitions are significantly larger than the others, disrupting the even distribution of the computational load.

Enabling the 'spark.sql.adaptive.skewJoin.enabled' property in Spark's Adaptive Query Execution (AQE) enables dynamic optimization of joins during query execution. This allows the AQE mechanism to detect situations where data is skewed and take appropriate steps to minimize the impact of such skewed joins on performance.

Adaptive Query Execution (AQE) can effectively address the issue of skewed joins by taking actions such as dynamically splitting skewed data into smaller parts, changing join strategies on the fly, or adjusting the size of data partitions based on observations during application execution. This empowers Apache Spark users to achieve significant performance improvements and minimize the impact of skewed data on their processing.

Skewed partition factor and threshold

Regarding optimization of skew joins, there are two properties which allow for precise customization of the system's behavior to handle data skew. Those properties are “spark.sql.adaptive.skewJoin.skewedPartitionFactor” and “spark.sql.adaptive.skewJoin.skewedPartitionThresholdInBytes”.

The property spark.sql.adaptive.skewJoin.skewedPartitionFactor is used to determine a factor that serves as the threshold above which partitions are considered skewed. If the number of records in a given partition exceeds the value calculated from the formula (skewedPartitionFactor * average row count in partition) the partition is recognized as skewed. For example, setting

spark.conf.set("spark.sql.adaptive.skewJoin.skewedPartitionFactor", 3)

implies that a partition will be deemed skewed if the number of rows in the partition exceeds three times the average number of records in that partition. On the other hand, spark.sql.adaptive.skewJoin.skewedPartitionThresholdInBytes is employed to specify the maximum partition size beyond which partitions are marked as skewed. It monitors progress during join execution and analyzes partition sizes. By setting

spark.conf.set("spark.sql.adaptive.skewJoin.skewedPartitionThresholdInBytes", 128 * 1024 * 1024)

partitions exceeding 128 MB are considered skewed.

Both of these properties work synergistically, controlling imbalances during "join" operations. In the event of detecting skewness, Spark SQL AQE can apply optimization strategies such as dynamic reordering, dynamic resizing of partitions, dynamic broadcasting, or additional partitioning. This adaptive mechanism enables Spark to effectively handle variations in data distribution, resulting in enhanced performance and optimal resource utilization during data processing.

Dynamically coalesce shuffle partitions

In the world of Apache Spark's Adaptive Query Execution (AQE), one of the invaluable parameters you may encounter in the Spark SQL configuration is "spark.sql.adaptive.coalescePartitions.enabled" This parameter addresses critical challenges related to optimizing the performance and resource utilization during shuffle operations.

When processing vast datasets, finding the right balance in the number and size of shuffle partitions is crucial. If the number of partitions is set too low, each partition can become unmanageably large, requiring excessive disk I/O and leading to performance bottlenecks. On the other hand, if the number of partitions is excessively high, the size of each partition may become too small, causing network I/O issues and slowing down job execution.

The "Dynamically coalesce shuffle partitions" property acts as a dynamic solution to these problems. It enables Spark to adjust the number of partitions on-the-fly, striking the perfect balance between partition sizes, ensuring efficient data movement, and minimizing resource waste.

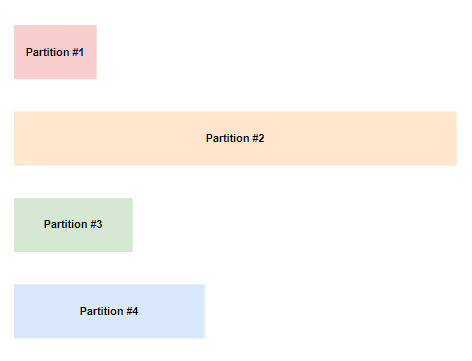

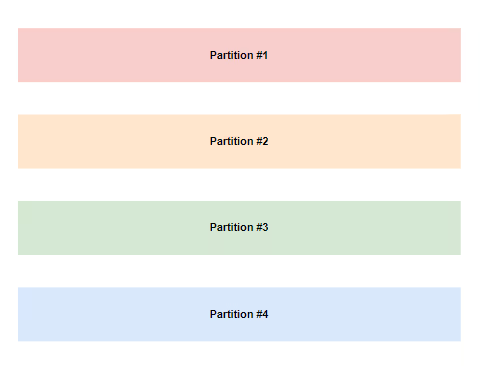

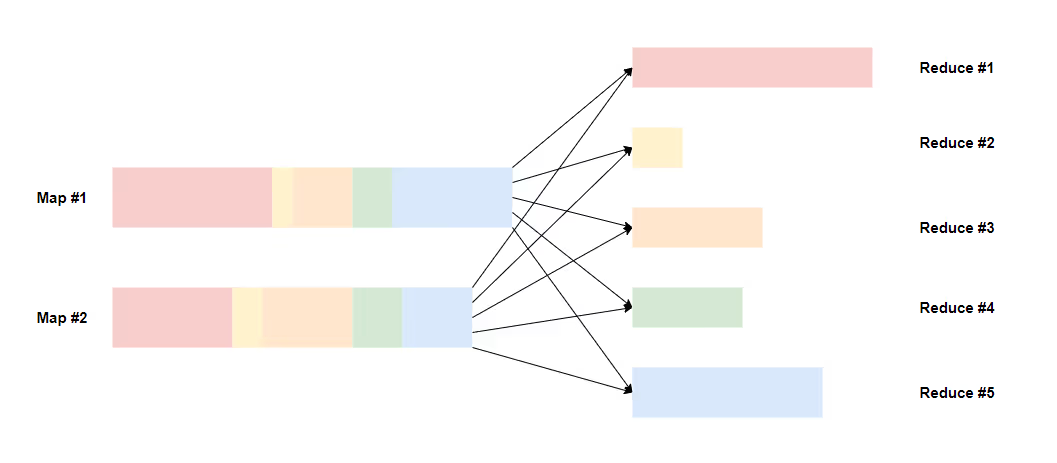

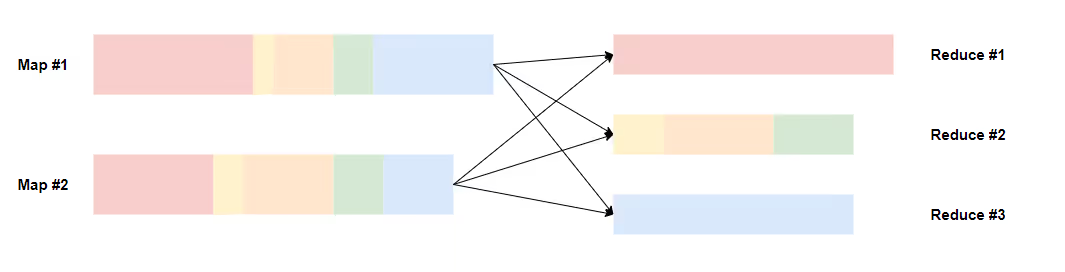

The pictures below illustrate how we can decrease “reduce” operations.

Partition number

Within the domain of Adaptive Query Execution (AQE) in Apache Spark, the "spark.sql.adaptive.coalescePartitions.initialPartitionNum" property proves to be a valuable tool for effectively managing the initial number of partitions during data processing. Tailoring the number of partitions at the start can significantly influence performance and resource utilization. With the customization of "spark.sql.adaptive.coalescePartitions.initialPartitionNum," users gain the flexibility to dynamically set the optimal number of initial partitions based on their data volume and cluster resources. This adaptive capability ensures that Spark initiates data processing with partitions of the appropriate size, thereby reducing the risk of having either too few or too many partitions. This level of control results in enhanced data processing efficiency and maximized resource usage. Whether you're working with extensive datasets or resource-limited clusters, this property offers a means to strike a precise balance, ultimately optimizing your Spark applications.

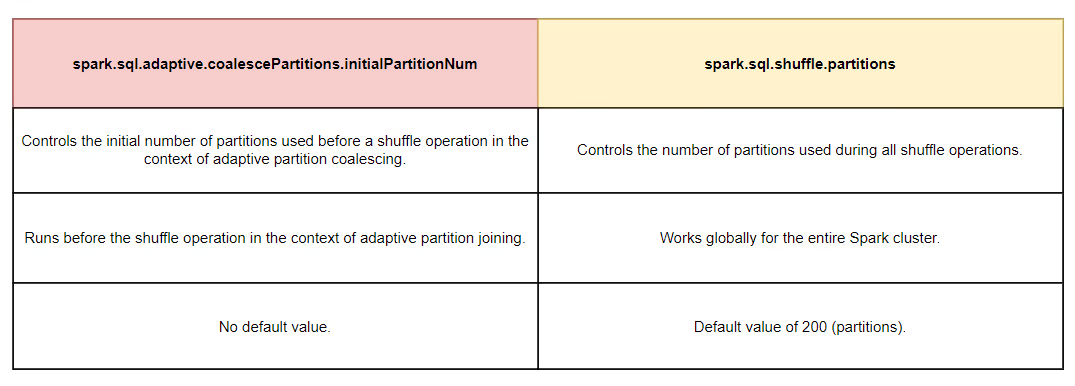

Regarding the partition number it is worth mentioning the “spark.sql.shuffle.partitions” property. The property spark.sql.shuffle.partitions assumes a central role in this optimization effort, influencing the number of partitions during shuffle operations like groupByKey or reduceByKey, which entail redistributing data across Spark executors. It dictates the extent to which partitions are divided during shuffle, defaulting to 200 if not explicitly defined for a specific operation. For clusters handling substantial data loads, augmenting the partition count can promote more even load distribution and accelerate shuffle tasks. However, a word of caution is warranted, as an overly high partition count may trigger unnecessary data movement, potentially leading to a decline in performance. It is advisable to experiment with diverse values for this property, finding the optimal balance that aligns precisely with specific processing requirements.

The table below shows the main differences between these properties.

Advisory partition size

This parameter plays a significant role in determining the advisory partition size in bytes for shuffle partitions during coalescence operations. The coalescence operation aims to merge contiguous partitions to achieve a more optimal size.

It's worth noting that the configuration spark.sql.adaptive.advisoryPartitionSizeInBytes comes into effect when both spark.sql.adaptive.enabled and spark.sql.adaptive.coalescePartitions.enabled are set to true. Adjusting the advisory partition size directly impacts the optimization of Spark query execution. Smaller partitions can lead to increased parallelism but may result in higher overhead due to numerous small tasks. On the other hand, larger partitions may reduce task overhead but can lead to uneven load distribution.

Striking the right balance through advisoryPartitionSizeInBytes adjustment is crucial for performance optimization, especially in scenarios where data size and distribution vary.

By default, spark.sql.adaptive.advisoryPartitionSizeInBytes is set to 64 megabytes. However, this default value can be customized based on specific requirements. For instance, if you want to increase the partition size to 100 megabytes, you can use the following Spark configuration:

spark.conf.set("spark.sql.adaptive.advisoryPartitionSizeInBytes", "100m")

Parallelism first

The "spark.sql.adaptive.coalescePartitions.parallelismFirst" parameter plays a pivotal role in optimizing the parallelism of specific operations, such as join and groupBy, within the Apache Spark framework. The significance of this parameter becomes apparent when considering that different operations often necessitate varying degrees of parallelism, depending on factors like the data size and the available computing resources.

When set to true, the "spark.sql.adaptive.coalescePartitions.parallelismFirst" parameter makes Spark disregard the target size specified by "spark.sql.adaptive.advisoryPartitionSizeInBytes" (default 64MB) when coalescing contiguous shuffle partitions. Instead, it only respects the minimum partition size specified by "spark.sql.adaptive.coalescePartitions.minPartitionSize" (default 1MB), aiming to maximize parallelism. This approach is taken to prevent performance regression when enabling adaptive query execution. However, it is generally recommended to set this configuration to false and respect the target size specified by "spark.sql.adaptive.advisoryPartitionSizeInBytes" to achieve more predictable and controlled coalescing behavior.

Minimal partition size

The 'spark.sql.adaptive.coalescePartitions.minPartitionSize' property fulfills a crucial function within the Apache Spark ecosystem., offering a means to fine-tune partition optimization during data processing. With this property, users can specify the minimum partition size that must be preserved when adjusting partition counts to enhance processing efficiency. In practical terms, if the partition size already exceeds the predefined minimum threshold, it won't undergo further optimization, thus averting unnecessary data fragmentation.

A primary application of this property revolves around overseeing partition size within a Spark cluster. This ensures that the number of partitions doesn't balloon excessively, mitigating the associated overhead in partition management and preserving processing performance. Furthermore, governing the minimum partition size aids in judiciously leveraging cluster resources by staving off undue data fragmentation and maintaining larger partitions, thereby expediting processing tasks.

Summary

In the domain of big data processing, Apache Spark serves as a powerful platform for efficiently handling vast datasets. Adaptive Query Execution (AQE) plays a central role in optimizing query performance within Spark. This article explored AQE-related Spark SQL configuration parameters, underscoring their influence on query execution. It's essential to note that the parameters discussed here represent just a subset of the comprehensive AQE toolkit.

AQE's inherent value lies in its ability to dynamically adapt query execution, optimizing performance and resource utilization. By addressing skewed data joins and providing flexible controls for partition management, AQE simplifies complex optimization challenges. The benefits of embracing AQE extend to enhanced query performance, more efficient resource use, and automated optimization.

In conclusion, AQE is a valuable asset for Apache Spark users, and its configuration parameters empower users to tailor their applications for optimal results, simplifying the intricacies of query optimization in the realm of big data processing.

.png)