Despite being a cornerstone in the deployment and maintenance of machine learning models, MLOps definition has been clouded by confusion and debate. In this article, we delve into why do we need MLOps, its specifics, fundamental principles, and the role of automation. With these insights, we aim to demystify MLOps and emphasize its crucial role in streamlining machine learning projects.

The motivation for MLOps

During an episode of the Practical AI podcast in 2022, Luis Ceze, CEO and co-founder of OctoML, made a statement that sparked controversy: he claimed that MLOps is not real. His argument centered on the idea that we needlessly complicate machine learning model deployment process without properly addressing the core question of why it should be treated differently from any other software module. While Mr. Ceze's point is valid, I can assure you that MLOps is indeed real, but requires some clarification as there is still a lot of confusion surrounding this discipline. To understand its origins, we must first recognize that the tangible result of the model training process is a file (often a binary file) that contains weight values. However, this file is not a functional model that maps inputs X to outputs Y. We must create a software module that generates such a model from the saved weights and then create another software that will put this model into prediction service – a process known as model serving or model deployment. This is where the most popular MLOps definition comes in:

MLOps is a set of practices aimed at deploying and maintaining machine learning models in production.

DevOps vs MLOps

One issue with this definition is that if we consider the machine learning model simply as a result of software development process, we arrive at the definition of DevOps. The MLOps concept has caused a lot of confusion as it appears to have borrowed and attributed the definition of DevOps, leading to misconceptions. However, MLOps is no mere ripoff. Andrew Ng, a Stanford University professor and AI entrepreneur, in his Machine Learning Engineering for Production specialization defined MLOps as:

A discipline comprising a set of principles, tools, and best practices to create and maintain a machine learning project in its whole lifecycle.

MLOps encompasses more than just deployment and is a broader term than DevOps. It involves the entire life cycle of a machine learning project. Let's explore the specifics.

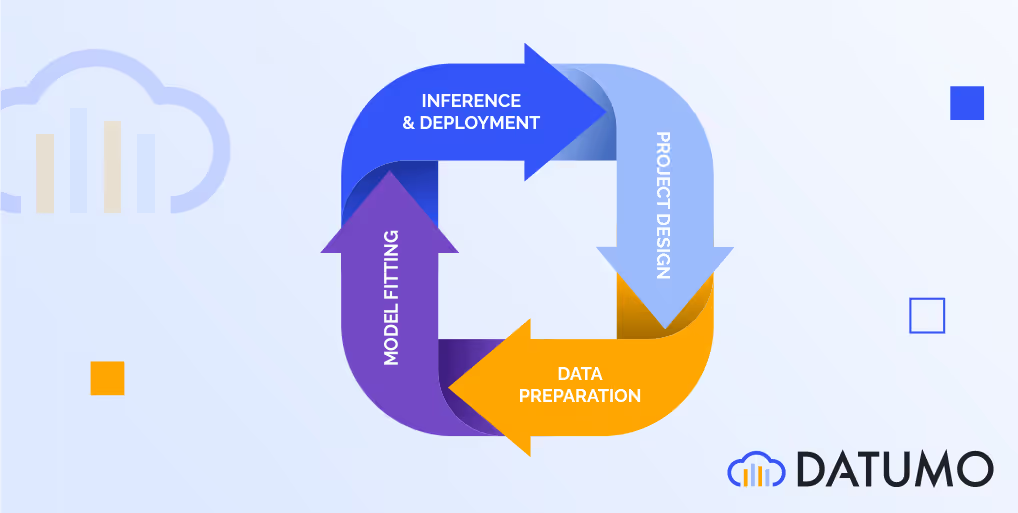

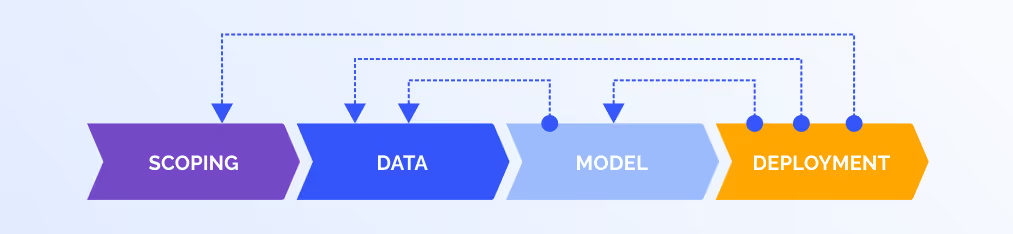

What is machine learning lifecycle?

While numerous studies have explored the lifecycle of machine learning systems, it is worth noting that different sources may divide it into varying numbers of phases. However, it is widely recognized that there are four key phases, based on consistently defined fundamental objectives.

Machine learning project design (scoping)

The purpose of this phase is to establish a clear understanding of all the necessary information from the start. It involves gaining a thorough knowledge of the use case, documenting initial requirements, and identifying key datasets needed to solve the problem which results in a potential risk reduction in the future. A data collection plan should be created by data teams if high-quality data is not available. Domain experts are essential for knowledge gathering in this phase, especially for complex projects such as in medical or engineering fields.

Data preparation

In the second phase, data teams focus on taking the path from source data to the input data that the model requires to be trained with. It is important to define the type of training data we will be working with before proceeding with later steps, including data analysis, data collection, data labeling, and data engineering (feature engineering). The labeling process should include clear conventions, best practices and training for the team responsible for these tasks. In order to tackle complex problems, it is recommended to establish a baseline, usually by preparing test datasets. A baseline can refer to a simple model, a particular method, or the outcome of manual work. It serves as a point of comparison, against which the subsequently developed initial models validation are performed.

Model creation

This phase aims to discover the best model that can effectively address the issue at hand. We can improve model quality by exploring different architectures, model training methods, and parameters in reference to the baseline created in the previous steps. During this phase, data science team trains and evaluates the model multiple times through various experiments, before selecting the final release candidate model.

Model deployment

The last phase of the cycle is where you deploy and manage models. Along with deploying machine learning production model, the model deployment step also covers functional (data distribution, live model performance) and operational (computing resource usage) monitoring and management of the entire MLOps platform.

The duration of the project phases varies depending on the project scope. For instance, I personally worked on a project that involved extending an existing model to solve a new problem in the medical field. The anticipated time frame for the initial cycle was two years. We accomplished scoping within three months. Data preparation consumed 18 months, while model creation required only a month. Deployment, on the other hand, took just a few weeks. This is an exceptional case that dealt with a complex medical issue, but the time distribution across the phases remains mostly the same. It's worth noting that some parts of the phases can occur concurrently when there's a large enough team. For example, we could begin collecting data in parallel to the scoping phase or prepare for deployment while developing the models.

For MLOps, the cycle part is crucial. This means that each of the phases mentioned earlier could occur multiple times throughout the project's lifespan. The typical cycle involves data > model > deployment. We only scope the project once at the beginning, and we keep the model updated with any possible changes. The data > model and model > deployment cycles may occur if something goes wrong and we need to reverse any changes. A full scoping > data > model > deployment cycle happens less frequently and only in cases where there are significant changes to the existing solution or when expanding it to new problems. The bottom line is that we can always go back and work in cycles, and we should do so to continually upgrade the project.

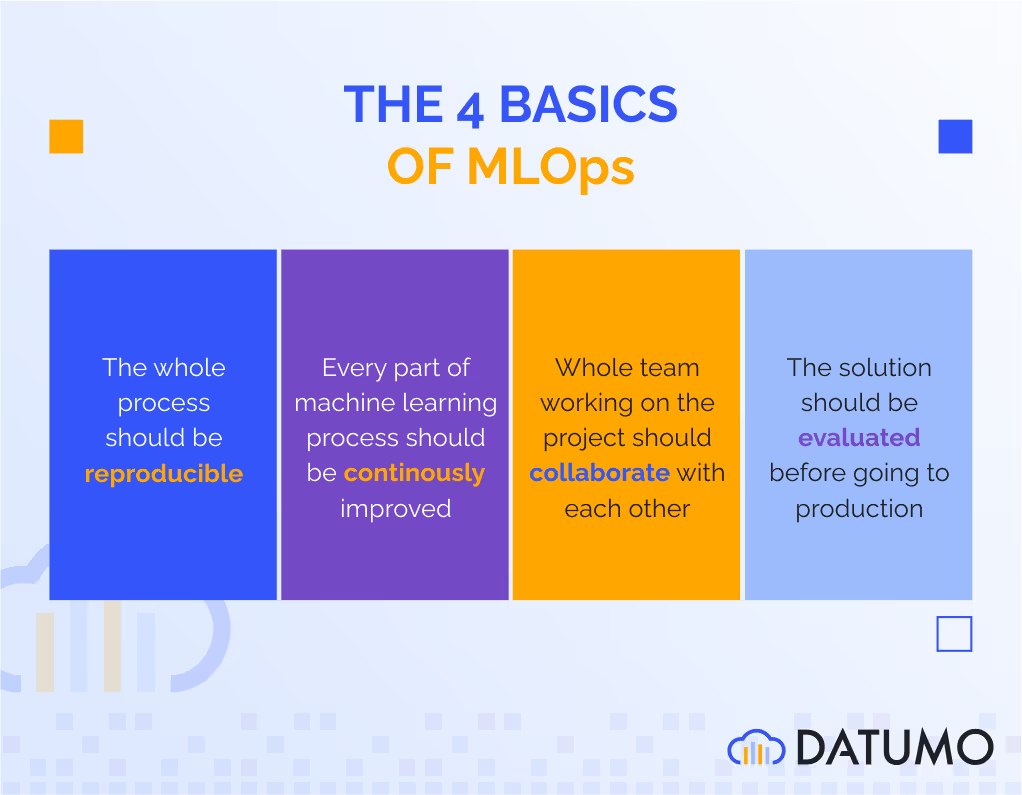

MLOps principles - key benefits of MLOps

Want to know more about the benefits of MLOps? First, it's important to discuss the fundamental principles. While there are various studies with different names for these principles, they generally fall into one of four categories. All of them can be directly considered as best practices for MLOps and therefore, include its key benefits.

Reproducibility

To ensure reproducibility in a machine learning system, all components must be easy to reproduce at every stage. This involves versioning not just the source code used for model development, but also the need to track model versions, data, experimental results, and documentation. The team should also follow standardized processes to facilitate maintenance, debugging, experimentation, and further project development.

Continuity

To ensure a successful machine learning project, it's important to continually develop every aspect to accommodate changing business needs, potential data drifts, and evolving standards. This includes not just retraining and updating the model version as a part of machine learning development lifecycle but also maintaining the code, upgrading underlying libraries, and refreshing or cleaning stale data. This principle is directly connected to the system lifecycle.

Collaboration

The team working on a machine learning project is multidisciplinary - usually consisting of machine learning engineers, data scientists, data engineers, software developers, and domain experts, all with different knowledge and skill sets. The collaboration principle means that we must create a transparent environment for all of them to work together and make it easy to share knowledge across the team.

Evaluation

The last and probably the most obvious principle. Evaluation is most commonly associated with model testing. Still, it applies to every other part as well - everything from data to code should be evaluated before going further into the lifecycle. Additionally, system monitoring falls under this rule, thus helping to ensure the best performance and early detection of possible system flaws.

When do we need to automate MLOps pipelines?

One of the stages of MLOps platform is to build machine learning pipelines. However, you may have noticed that all these rules and phases did not mention automation, which is often seen as a crucial aspect of MLOps. However, while automation does have a role in a mature MLOps system, it should not be seen as a critical component or a motivation for MLOps implementation in the early stages of a project.

If you are working with a small team and exploring a new field in a machine learning project, it might not be the most efficient use of your time and resources to develop fully automated pipelines for every aspect of the solution - model development pipelines, data pipelines, deployment pipelines etc. Instead, it is crucial to prioritize the key components of MLOps system. However, if you have multiple dedicated teams working concurrently or if you are in the final stages of the project and ready for production, it could be worth investing in a MLOps pipeline automation to streamline the process.

Standardization (falling under the reproducibility principle) is in fact the critical value that should drive the automation idea and process. Lack of standardization can lead to colossal project maintenance issues. Picture having multiple data scientists, each with their own code, computational resources, storage, and ML pipelines. Automation can address this challenge. A parallel solution would be to establish a centralized platform, such as Vertex AI by Google, Azure Machine Learning by Azure or Amazon Sagemaker by AWS, that offers (at a minimum) fundamental features like runtime code execution, data storage (feature store is an additional advantage), model registry and a dedicated team to manage this platform. What about hybrid MLOps infrastructure with multiple components of MLOps platform being provisioned as different cloud resources? It's more complex in terms of managing machine learning platforms but it can be a solution when there is a need for separation (e.g. dealing with private or sensitive data). This approaches ensures consistency throughout the project, increases reproducibility, and facilitates collaboration.

Why does MLOps matter?

MLOps may seem fairly complicated, since it is a relatively new concept that is still evolving and developing. However, if we closely examine its principles, we can see that MLOps practices emerged as an adaptation of good practices from DevOps, specifically tailored to the needs of the machine learning world. What is the basic use of MLOps in real world scenarios? Its primary goal is to simplify the lives of everyone involved in a machine learning project. Including MLOps in your project is highly recommended, as it will ultimately save you time and resources. Even in basic and well-documented scenarios within a small team, it's better to have a simple MLOps system than no system at all.

In today's fast-evolving tech landscape, MLOps is your key to maintaining a competitive edge. Stay tuned for more in-depth insights on this game-changing field in our upcoming articles, and unlock the full potential of MLOps for your organization.

.png)

.png)