Introduction to clustered tables

Contemporary data warehouses in the cloud enable the analysis of datasets at petabyte scale, creating an illusion of limitless scalability for end users. These tools make it possible for anyone to initiate the analysis of extensive datasets within minutes. The era of relying on highly specialised engineers' experience in operating dedicated Big Data tools appears to be a thing of the past, doesn't it? Yet, this perception would hold true if analysis incurred no expenses. Unfortunately, utilising these data warehouses comes at a significant financial cost.

Reducing the expenses associated with operating a data platform involves various strategies, ranging from fine-tuning data pipelines through micro optimizations to implementing larger-scale changes such as altering billing plans. Nevertheless, we believe that the essence of a data platform lies not just in constructing pipelines, but in effectively utilising the data emerging from the platform as a valuable data product. Let's explore how we can organise data on BigQuery, Google Cloud's native data warehouse.

BigQuery offers two approaches for organising table storage layout, partitioning and clustering. Partitioning is more commonly used, this method involves dividing tables into smaller subtables (partitions) based on factors such as row ingestion time or a DATETIME/INT64 column. Table partitioning is particularly effective with batch systems, where each batch job output typically corresponds to a single partition in the output table.

On the other hand, clustering, while less known, has the potential to significantly reduce query costs, not merely by a factor of two, but potentially by a hundredfold. Our team engages with BigQuery regularly, employed in tasks such as constructing new data products or designing data platforms for our clients. Today, we aim to share our knowledge and experience to dispel misconceptions associated with clustering.

What is clustering in BigQuery?

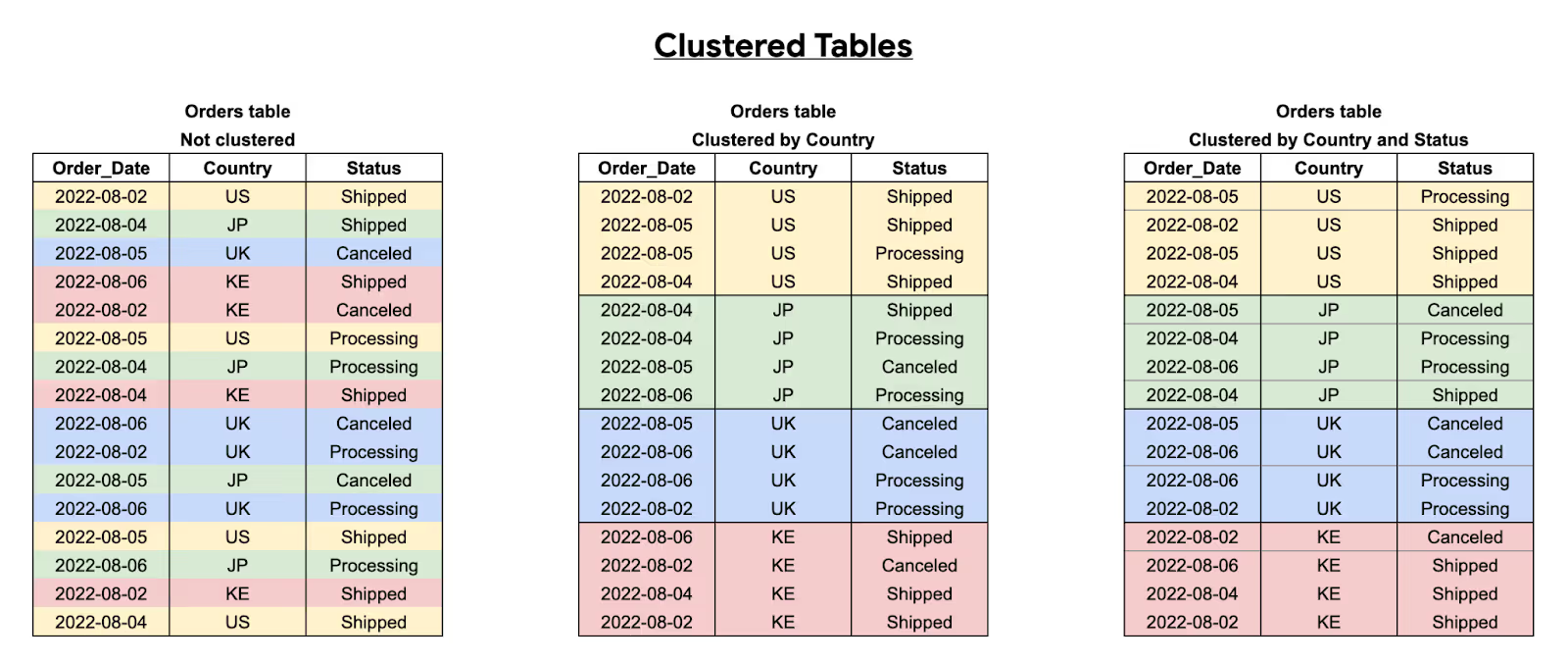

Clustering involves arranging table rows up to four columns, as illustrated in Figure 1. The order of these clustering columns is crucial, as they collectively establish a sorting key.

Source: https://cloud.google.com/bigquery/docs/clustered-tables.

Deep dive - how clustering works

Analysis typically involves selecting specific rows for examination. When tables are appropriately clustered, the relevant data is stored in close proximity. Using this property, BigQuery can deliver results more efficiently, leading to reduced query costs.

To illustrate, let's explore some examples using BigQuery's open datasets, focusing on analysing the popularity of Wikipedia pages as of 2024-01-01. In this scenario, we'll create three copies of the available data (refer to Figure 2): one without clustering, another clustered by title, and a third clustered by datehour.

CREATE TABLE demo.pageviews_20240101

AS

SELECT * FROM `bigquery-public-data.wikipedia.pageviews_2024`

WHERE DATE(datehour) = DATE('2024-01-01');

CREATE TABLE `demo.pageviews_20240101_by_title`

CLUSTER BY title

AS

SELECT * FROM `bigquery-public-data.wikipedia.pageviews_2024`

WHERE DATE(datehour) = DATE('2024-01-01');

CREATE TABLE `demo.pageviews_20240101_by_datehour`

CLUSTER BY datehour

AS

SELECT * FROM `bigquery-public-data.wikipedia.pageviews_2024`

WHERE DATE(datehour) = DATE('2024-01-01');Figure 2. A snippet of SQL code illustrating the configuration of tables, first without clustering and then with clustering using different fields.

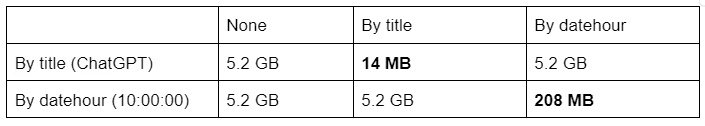

Subsequently, an assessment is carried out on the expenses associated with executing sample queries on the three tables. For this comparison, we employ two queries. The first query involves selecting the total page views of the "ChatGPT" article throughout the entire day (refer to Figure 3). In the second query, we seek statistics for all pages within a specific hour (refer to Figure 4).

SELECT datehour, title, views

FROM `demo.pageviews_20240101_by_title`

WHERE title = 'ChatGPT';Figure 3. Query for total page views of the article about "ChatGPT".

SELECT datehour, title, views

FROM `demo.pageviews_20240101_by_datehour`

WHERE datehour = '2024-01-01 10:00:00';Figure 4. Query for data from a specific day.

After examining the statistics of querying clustered tables (refer to Table 1), it becomes evident that clustering has significantly reduced the billed byte count. Notably, filtering by title has brought down the billed bytes from 5.2 GB to 14 MB. Through effective clustering, the query cost has been reduced by a remarkable 371 times. While some might consider this quite straightforward example, it has proven to be a significant solution for a substantial issue faced by one of our clients.

Cost reduction success: a case study on clustering in BigQuery

A client recently developed a new dashboard using Looker Studio, utilising a BigQuery table as the data source. The table was partitioned based on ingestion time, with new partitions added daily to capture updated information about user behaviour on a website. The table construction was optimised for reading specific days. However, the dashboard's intended use differed significantly; it aimed to display records corresponding to selected users throughout the entire historical data. The client approached us after realising that using the dashboard for just one day would incur a daily cost of approximately $175.

As you may have observed, their challenge stemmed from the necessity to scan the entire table when seeking records related to selected users, occurring each time Looker Studio executed a job on BigQuery to refresh results. We began brainstorming potential solutions. Initially, modifying the source table wasn't feasible as we lacked ownership rights. While the construction of the table was generally good, it didn't meet our specific needs. Ultimately, our solution involved scheduling a daily job to copy the historical data and save it as a table, organised with clustering by user (costing approximately $4 per day). The entire process of constructing the pipeline, from design to deployment, took us three hours. By implementing this approach, we successfully reduced the daily cost of utilising the dashboard to $1. While there was potential for further optimization by incrementally adding new rows in scheduled jobs, the client found the total bill of $5 a day wholly acceptable. With this relatively straightforward table clustering optimisation, we enabled our client to save $5 000 per month in expenses.

We need to delve deeper

We have already passed the “$5 000 toy example”. Now let's see why and how clustering helps with query execution.

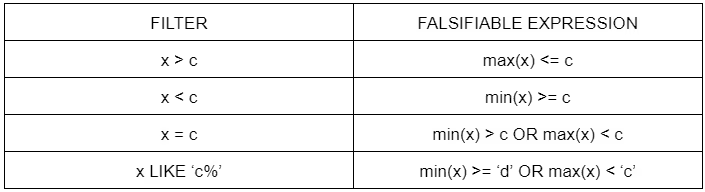

Primarily, the structure of a BigQuery table consists of blocks. Furthermore, BigQuery maintains metadata that outlines fundamental statistics about these blocks. A comprehensive exploration of the entire metadata management process is outside the scope of this article. As detailed in the article "Big Metadata: When Metadata is Big Data" [1], block metadata encompasses minimum and maximum values for each column. This information plays a crucial role in skipping selected blocks during query execution, a process known as "Block pruning," which is elaborated on with a clear and simple example in the BigQuery documentation.

In BigQuery, metadata is employed to construct "falsifiable expressions", which aid in excluding blocks from being read. If a function evaluates to TRUE, the corresponding block is skipped. Examples of such functions, as outlined in the article [1], are presented in Table 2.

Demystifying clustering misconceptions

Now let's review some common misconceptions about clustering in BigQuery.

I Block pruning only works for filters on clustering columns when querying tables

Applying filters to columns involved in clustering ensures the effectiveness of block pruning, but it is not obligatory. While clustering specifically defines the sorting key, metadata blocks are generated for all columns. To illustrate this, we utilised Covid-19 open data (refer to Figure 5). In this scenario, we constructed a table with the country code (clustering column) and the country name.

-- cost: 320 MB

CREATE TABLE `demo.correlated_values`

CLUSTER BY country_code

AS

SELECT country_name, country_code

FROM `bigquery-public-data.covid19_open_data_eu.covid19_open_data`;Figure 5. SQL code which creates the table which uses the country_code field as a clustering column.

We opted to use only the country code as the clustering column, excluding the country name from clustering. However, these two values exhibit a strong correlation. Sorting based on the country code also arranges the country name column accordingly. This correlation becomes evident when executing a simple select with a filter on the country name (refer to Figure 6). Although the estimate doesn't utilise clustering information and shows 240 MB for that query, the actual cost is only 39 MB. Remarkably, clustering remains effective even when a clustering column is not explicitly used.

-- estimate: 240 MB

-- cost: 39 MB

SELECT country_name

FROM `demo.correlated_values`

WHERE country_name = 'Poland';Figure 6. Reduced data consumption due to strong correlation between the coutry_name and coutry_code fields.

It is even better! That trick works with nested columns! Let's generate a fresh example featuring a new struct named "country data" which will contain both the country name and country code. In BigQuery, there's a technical limitation preventing the use of nested columns in clustering specifications. However, we can circumvent this by duplicating the column as a top-level one, thus enabling clustering (refer to Figure 6).

-- cost: 320 MB

CREATE TABLE `demo.correlated_values_nested`

CLUSTER BY country_code

AS

SELECT country_code,

STRUCT(country_name, country_code) AS country_data

FROM `bigquery-public-data.covid19_open_data_eu.covid19_open_data`;Figure 7. SQL code which creates the table with a nested column which uses a duplicated.

Although duplicating the column does result in added expenses, the benefits obtained from clustering may outweigh the extra cost. As depicted in Figure 8, applying clustering significantly improved query performance in BigQuery, despite applying filters on a nested field.

-- estimate: 240 MB

-- cost: 39 MB

SELECT country_data.country_name

FROM `demo.correlated_values_nested`

WHERE country_data.country_name = 'Poland';

Figure 8. Clustering also extends to nested fields, despite the lack of use of a clustered field.

II Clustering is like RDBMS index

In videos and blog posts discussing BigQuery clustering, one might encounter comments along the lines of "OMG! Why not just add a new index to that table? Clustering is called an index in my RDBMS." However, the reality is not that simple. While these developers may boast over a decade of experience with relational databases, they may not have encountered datasets surpassing 50 GB. Unlike non-clustered indexes in traditional relational database management systems (RDBMS), which are constructed as B-trees—a tree-like data structure—BigQuery operates on a distributed cluster for tables and metadata storage. Additionally, constructing a B-tree for tables at the scale of BigQuery would result in a massive object.

It turns out that for such large-scale datasets, a simpler metadata system with block pruning proves more effective. This approach can be parallelized across multiple workers, a feat that aligns well with the distributed nature of BigQuery's storage and processing architecture.

The only comparable index that comes to mind is the clustered index in MS SQL Server, frequently applied to the primary key, leading to a sorted order on disk space. In BigQuery, there is a similar concept, but with the flexibility for users to specify an arbitrary list of up to four columns for clustering.

Conclusion

The use of partitioning and clustering in Google BigQuery helps to effectively organise data within tables. Nevertheless, optimization in various systems adheres to a fundamental principle – "Less is better." We've explored the workings of clustering and offered insights into achieving optimal results. Additionally, we've dispelled common misconceptions and shared lesser-known tips and tricks about clustering. We used a real case to show how clustering can improve query performance in BigQuery.

If you have any questions or concerns while reading this article, feel free to contact us. With our daily engagement, we strive to assist our clients in maximising the advantages offered by BigQuery.

Sources

[1] Pavan Edara and Mosha Pasumansky. Big Metadata: When Metadata is Big Data. PVLDB, 14(12): 3083 - 3095, 2021. doi:10.14778/3476311.3476385

.avif)

.avif)